AI Log Analysis

Understand how to use AI to analyze logs and automate root cause analysis

AI is inherently good at understanding and analyzing logs. Two properties of log lines make LLMs great at understanding them.

- The majority of log lines come from open source components - Even in proprietary applications, chances are that the log line you're interested in comes from an open source library. That Redis connection failure is likely thrown by the underlying Redis library, and your application simply logs it.

- Open source components are discussed on the internet - For most errors, people have already run into the same problem in the past, just in a different context. They've spoken about that error on the internet, likely in a GitHub issue. They've then fixed the issue and posted about what caused it and how to fix it.

That's perfect for AI, as LLMs are trained on huge amounts of textual data, the vast majority of which comes from the public internet. This means that for a huge number of logs, they've already seen the associated posts and articles in their training data.

If you think about how you understand what a log line means as an engineer, you normally follow these steps:

1. Something bad happens in your application. You start looking through the logs for unexpected entries and find an error log line.

2. Grab the log line and search for it on Google.

3. Find forum posts or articles written by other engineers explaining when they hit this error, what caused it and how to fix it.

4. From there, use your own knowledge of how your system functions to apply the fix to your own system.

We can take a couple of different approaches to automate this workflow.

Simple Chat Workflows

You can get a lot of the value of LLMs just by automating steps 2 and 3 using your favourite LLM chatbot. Open up Claude or ChatGPT, paste in the log line and off you go. For example, I have an application that is failing to make an API request to one of my services, so I can paste the error into Claude.

Claude tells me that the problem is a failure to resolve DNS for the host cbtestinga.mooo.com.

However, Claude doesn't know that this hostname is meant to point at a service in my Kubernetes cluster, and that cbtestinga.mooo.com is a DNS entry that should be resolved by the in-cluster DNS server.

After giving it context about the Kubernetes cluster, it can give us a much more targeted resolution. At this point we are integrating step 4 into the chat workflow.
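The difference between the two chats comes down to what goes into the prompt. A minimal sketch of that idea follows. It assumes nothing about any particular chatbot API and only builds the text you would paste in. The `build_prompt` helper and the log line are hypothetical and written purely for illustration.

```python
def build_prompt(log_line: str, system_context: str = "") -> str:
    """Combine an error log line with optional notes about the system it came from."""
    parts = [
        "Explain the likely cause of this log line and suggest a fix.",
        f"Log line:\n{log_line}",
    ]
    if system_context:
        # Step 4: supply knowledge about our own system that the model cannot know.
        parts.append(f"Context about my system:\n{system_context}")
    return "\n\n".join(parts)


# Hypothetical log line, invented for this example.
log_line = "ERROR request failed: could not resolve host cbtestinga.mooo.com"
context = (
    "The application runs in a Kubernetes cluster. "
    "cbtestinga.mooo.com should be resolved by the in-cluster DNS server."
)
prompt = build_prompt(log_line, context)
print(prompt)
```

Calling `build_prompt(log_line)` without the second argument corresponds to the first chat, where the model can only give a generic DNS answer.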

Just doing these steps helps us condense our workflow and resolve the issue sooner, but there are still a number of manual steps:

- We still need to figure out what's wrong, find the root cause log line and paste that into the chatbot.

- We still need to give context about our system to the chatbot to help it give a more targeted fix or debugging steps.

What if we wanted to automate these steps too?

Agentic Log Analysis Systems

Agentic Log Analysis Systems aim to automate all four steps of the log investigation lifecycle.

The simplest solution to this problem would be to take all log lines emitted by an application, throw them into an LLM and ask if there are any issues that we should be aware of. This would let the LLM itself perform step 1 in the process by simply checking every log line. However, this is impractical in most scenarios because of the sheer volume of log data. Most applications emit at least a single log per request, and at any sort of scale we start to hit cost constraints. Piping all log data for a single busy application could run up a bill of tens of thousands of dollars per month.
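To get a feel for where a number like that comes from, here is a rough back-of-the-envelope estimate. Every input is an assumption chosen for illustration: the request rate, the tokens per log line and the price per million tokens are not measurements or real pricing from any provider.

```python
SECONDS_PER_MONTH = 60 * 60 * 24 * 30  # assume a 30-day month


def monthly_llm_cost(logs_per_second, tokens_per_log, usd_per_million_tokens):
    """Estimate the monthly cost of sending every log line to an LLM."""
    tokens_per_month = logs_per_second * tokens_per_log * SECONDS_PER_MONTH
    return tokens_per_month / 1_000_000 * usd_per_million_tokens


# Assumed workload: 500 requests/s, one 40-token log line per request,
# and a hypothetical input price of $0.50 per million tokens.
cost = monthly_llm_cost(
    logs_per_second=500,
    tokens_per_log=40,
    usd_per_million_tokens=0.50,
)
print(f"${cost:,.0f} per month")  # prints "$25,920 per month"
```

The estimate only counts input tokens for a single application. Output tokens, retries and additional services would push it higher.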

So we need to be smarter about which log lines we send to the AI. This is where agentic systems come in.

Agentic systems use a range of techniques to automate steps 1 and 4 of the investigation workflow. They reduce the number of log lines sent to the LLM through techniques like locality-sensitive hashing, so that each unique log is sent to the LLM only once. They automate context injection by gathering detailed information about the runtime of applications, either automatically through discovery or by allowing engineers to pass in docs describing how their systems operate.
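As an illustration of the deduplication idea, the sketch below uses SimHash, one well-known locality-sensitive hashing scheme, so that near-identical log lines get fingerprints that differ in only a few bits. It is not a description of how any particular product works. The digit masking, the `max_distance` threshold and the linear scan over seen fingerprints are all simplifying assumptions, and a production system would bucket fingerprints rather than compare against every one.

```python
import hashlib
import re


def tokens(line):
    # Assumed normalization: mask numbers so IDs, ports and counters
    # do not make otherwise identical lines look different.
    masked = re.sub(r"\d+", "<n>", line.lower())
    return re.findall(r"[a-z<>_]+", masked)


def simhash(line, bits=64):
    """Return a SimHash fingerprint: similar token sets give similar bits."""
    counts = [0] * bits
    for tok in tokens(line):
        digest = hashlib.blake2b(tok.encode(), digest_size=8).digest()
        h = int.from_bytes(digest, "big")
        for i in range(bits):
            counts[i] += 1 if (h >> i) & 1 else -1
    return sum(1 << i for i, c in enumerate(counts) if c > 0)


def hamming(a, b):
    """Number of bit positions in which two fingerprints differ."""
    return bin(a ^ b).count("1")


class LogDeduplicator:
    def __init__(self, max_distance=3):
        self.seen = []  # fingerprints of log lines already forwarded
        self.max_distance = max_distance

    def is_new(self, line):
        fp = simhash(line)
        if any(hamming(fp, s) <= self.max_distance for s in self.seen):
            return False  # a near-duplicate was already sent to the LLM
        self.seen.append(fp)
        return True


# Hypothetical log lines, invented for this example.
dedup = LogDeduplicator()
lines = [
    "connection to redis:6379 failed after 3 retries",
    "connection to redis:6379 failed after 5 retries",
    "user signup completed successfully",
]
to_send = [line for line in lines if dedup.is_new(line)]
print(to_send)
```

Only the lines that pass `is_new` would be forwarded to the LLM, which is what keeps the token volume manageable.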

After deciding whether or not an issue has occurred, these systems often let the LLM decide whether it needs to be investigated by a human, or even suggest fixes itself. They act as an extra layer of defence against issues.

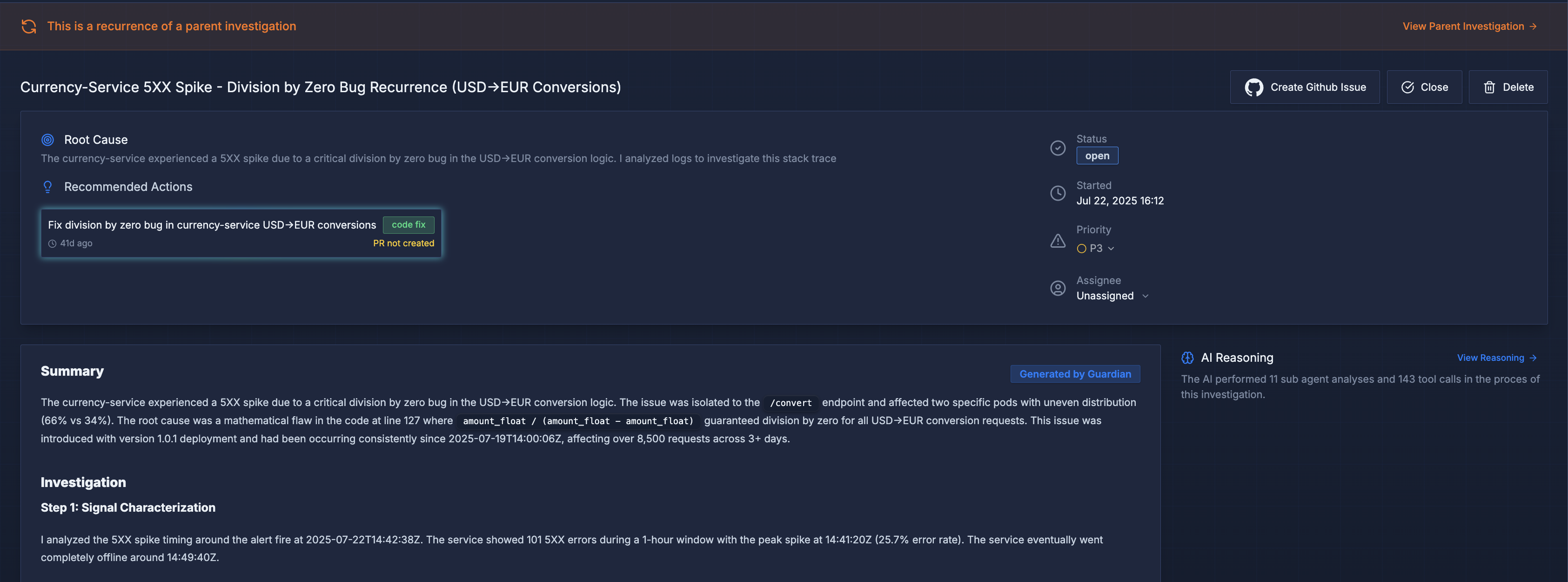

For example, here is the output of an issue investigated by the agentic log analysis system in Metoro.

Conclusion

In conclusion, how you use AI to help you investigate logs comes down to your use case. Initially, you can just start out with a chatbot. If you find yourself reaching for something more comprehensive and automated, you can try out an agentic log analysis solution.