# AWS Marketplace

Source: https://metoro.io/docs/administration/billing-aws-marketplace

Metoro supports both Stripe (default) and AWS Marketplace for billing. You can swap between the different methods at any time.

## Move to AWS Marketplace from Stripe

When switching from AWS Marketplace to Stripe, you will be immediately charged on Stripe for usage accrued in the current billing cycle. A new billing cycle will start in AWS from the day you switch.

1. Log in to the Metoro account you would like to connect your AWS account to.

2. Make sure you are an admin by heading to [settings](https://us-east.metoro.io/settings) and clicking on the **Users** tab.

3. Connect your AWS account to your Metoro account.

1. Head to [https://aws.amazon.com/marketplace/pp/prodview-4v43fz25vi6ug](https://aws.amazon.com/marketplace/pp/prodview-4v43fz25vi6ug)

2. Click **View purchase options**

3. Click **Subscribe**

4. Switch from Stripe to AWS Marketplace

1. Head into [billing](https://us-east.metoro.io/billing) and click on `Update your billing location`.

2. Select **AWS Marketplace**.

3. Click **Switch to AWS Marketplace billing**

## Move from AWS Marketplace to Stripe

When switching from AWS to Stripe, you will be charged both on AWS and on Stripe. You will be charged for all usage accrued up to the current time on AWS Marketplace.

A new billing cycle will start in Stripe from the day you switch and all subsequent usage will be charged to Stripe

1. Head into [billing](https://us-east.metoro.io/billing) and click on `Update your billing location`.

2. Select **AWS Marketplace**.

3. Click **Switch to AWS Marketplace billing**

## Viewing Metoro usage in the AWS Console

Head to **Cost Explorer** in the AWS Console, you will see a line item for Metoro usage.

Metoro reports usage to AWS Marketplace every hour on the hour however AWS Marketplace may only show this after a day.

## Cancellation

To cancel your Metoro susbscription, [move to Stripe billing](#move-from-aws-marketplace-to-stripe) and then move yourself to the hobby tier by following the instructions at \[/docs/billing-stripe#cancellation]

## Differences in billing

When billing through Stripe, Metoro charges per node-minute, each hour the total number of node minutes is reported to Stripe. At the end of the billing cycle, Stripe charges your card at for the total number of accrued node minutes throughout the month.

When billing through AWS Marketplace, Metoro charges per node-hour due to a limitation in AWS Marketplace, reported every hour. The number of node hours is rounded down to nearest hour. So in the case of 1.1 node hours, Metoro will charge for only 1 node hour.

# Billing

Source: https://metoro.io/docs/administration/billing-overview

Metoro bills on a usage model. The amount charged is based on the number of nodes that Metoro is monitoring.

Metoro records the number of nodes that are being monitored every minute and records the total number of node minutes used every hour.

Every hour, Metoro reports that sends that information to a billing provider. Each billing provider aggregates that usage and charges you at the end of the billing cycle.

All billing cycles in Metoro are monthly unless you are on the Enterprise plan in which case billing cycles are on a case by case basis.

Metoro curently supports two billing providers, go to their respective docs to see more information.

* [Stripe](/administration/billing-stripe)

* [AWS Marketplace](/administration/billing-aws-marketplace)

## Trial Period

All Metoro subscriptions come with a 14-day trial period. This applies regardless of the number of nodes.

## Viewing usage information

To view usage information, you can head to [billing](https://us-east.metoro.io/billing). At the bottom of the page, you will see a table of usage information for the last 14 days.

# Stripe

Source: https://metoro.io/docs/administration/billing-stripe

Stripe is the default billing method for Metoro. If you haven't explicitly changed to something else then you will be useing Stripe.

## Upgrading to the Scale plan

To upgrade to the Scale plan, just click on the Scale plan in [billing](https://us-east.metoro.io/billing).

You will then be taken to stripe where you can enter a credit card to be charged.

Alternatively, you can choose to pay through AWS Marketplace. See instructions [here](/administration/billing-aws-marketplace).

## Updating payment method

To update your payment details:

1. Head to [billing](https://us-east.metoro.io/billing)

2. Click **Update your billing details**. You will then be redirected to Stripe.

3. In stripe you can update your payment method

## Getting previous invoices

To get previous invoices:

1. Head to [billing](https://us-east.metoro.io/billing)

2. Click **Update your billing details**. You will then be redirected to Stripe.

3. In Stripe, you can download any of your last invoices to get an itemised receipt.

## Cancellation

To cancel your Metoro membership, move yourself to the hobby tier by clicking on the plan in [billing](https://us-east.metoro.io/billing).

When you move to the hobby plan, you will immediately be charged for the amount used in the current billing cycle on your existing plan. No further charges will be made.

# On-Premises Installation & Management

Source: https://metoro.io/docs/administration/on-premises

Guide for installing and managing Metoro in an on-premises environment

This guide provides detailed instructions for installing and managing Metoro in an on-premises environment.

It covers system requirements, installation steps, and best practices for maintaining your deployment.

## Prerequisites

Before beginning the installation, ensure your environment meets the following requirements:

* Kubernetes cluster (v1.19 or later)

* Helm 3.x installed

* Resource requirements per node for the Metoro Agent:

* CPU: 0.3 cores

* Memory: 300MB RAM

* Total resource requirements for the Metoro Hub:

* CPU: 4 cores

* Memory: 8GB RAM

* Network requirements:

* Access to quay.io/metoro repositories for pulling images (optional if using your own private registry)

* Internal network connectivity between cluster nodes

* Ingress controller for external access (recommended)

## Quick Start

### 1. Get Access to Required Resources

Contact us to get access to the Helm charts and private image repositories:

* Join our [Community Slack Channel](https://join.slack.com/t/metorocommunity/shared_invite/zt-2makpjl5j-F0WcpGnPcdc8anbNGcewqw)

* Email us at [support@metoro.io](mailto:support@metoro.io)

You will receive:

* Helm repository (zipped)

* Image repository pull secret

### 2. Prepare the Installation

1. Extract the helm chart:

```bash

unzip helm.zip && cd helm

```

2. Set your kubectl context:

```bash

kubectl config use-context CLUSTER_YOU_WANT_TO_INSTALL_INTO

```

### 3. Install Metoro Hub

Install the Metoro hub using Helm:

```bash

helm upgrade --install \

--namespace metoro-hub \

--create-namespace \

metoro ./ \

--set clickhouse.enabled=true \

--set postgresql.enabled=true \

--set onPrem.isOnPrem=true \

--set imagePullSecret.data= \

--set apiserver.replicas=1 \

--set ingester.replicas=1 \

--set temporal.enabled=true \

--set ingester.autoscaling.horizontalPodAutoscaler.enabled=false \

--set apiserver.autoscaling.horizontalPodAutoscaler.enabled=false

```

If the Clickhouse pod remains in pending state, it's likely due to insufficient cluster resources. You can adjust the resource limits in the Clickhouse StatefulSet definition.

### 4. Access the UI

1. Port forward the API server:

```bash

kubectl port-forward -n metoro-hub service/apiserver 8080:80

```

2. Create an account:

* Navigate to [http://localhost:8080](http://localhost:8080)

* Create a new account (do not use SSO options for on-prem installations)

### 5. Install the Metoro Agent

1. After logging in, select "Existing Cluster" and enter your cluster's name

2. Copy the `exporter.secret.bearerToken` value from the installation screen

3. Run the installation command:

```bash

bash -c "$(curl -fsSL http://localhost:8080/install.sh)" -- \

TOKEN_HERE \

http://ingester.metoro-hub.svc.cluster.local/ingest/api/v1/otel \

http://apiserver.metoro-hub.svc.cluster.local/api/v1/exporter \

--existing-cluster \

--on-prem

```

## Advanced Configuration - Production

### Minimal Production Configuration

For the metoro-hub values.yaml:

```yaml

clickhouse:

enabled: true

auth:

password: "CHANGE_ME_CLICKHOUSE_PASSWORD" # Use a random password

postgresql:

enabled: true

auth:

password: "CHANGE_ME_POSTGRES_PASSWORD" # Use a random password

onPrem:

isOnPrem: true

imagePullSecret:

data: "IMAGE_PULL_SECRET"

authSecret:

authMaterial: "CHANGE_ME_AUTH_MATERIAL" # Use a random string

apiserver:

replicas: 2

autoscaling:

horizontalPodAutoscaler:

enabled: false

defaultOnPremAdmin:

email: "YOUR_EMAIL_CHANGE_ME"

password: "YOUR_PASSWORD_CHANGE_ME"

name: "YOUR NAME_CHANGE_ME"

organization: "YOUR_ORGANIZATION_CHANGE_ME"

environmentName: "YOUR_ENVIRONMENT_NAME_CHANGE_ME"

temporal:

enabled: true

server:

config:

persistence:

default:

sql:

password: "CHANGE_ME_POSTGRES_PASSWORD" # Use the same password as the postgres above

visibility:

sql:

password: "CHANGE_ME_POSTGRES_PASSWORD" # Use the same password as the postgres above

ingester:

replicas: 2

autoscaling:

horizontalPodAutoscaler:

enabled: false

```

Then install with the following command:

```bash

helm upgrade --install --namespace metoro-hub --create-namespace metoro ./ -f values.yaml

```

For the metoro-exporter values.yaml:

```yaml

exporter:

image:

tag: "0.841.0"

envVars:

mandatory:

otlpUrl: "http://ingester.metoro-hub.svc.cluster.local/ingest/api/v1/otel"

apiServerUrl: "http://apiserver.metoro-hub.svc.cluster.local/api/v1/exporter"

secret:

externalSecret:

enabled: true

name: "on-prem-default-exporter-token-secret"

secretKey: "token"

nodeAgent:

image:

tag: "0.65.0"

```

Then install with the following command:

```bash

helm repo add metoro-exporter https://metoro-io.github.io/metoro-helm-charts/ ;

helm repo update metoro-exporter;

helm upgrade --install --create-namespace --namespace metoro metoro-exporter metoro-exporter/metoro-exporter -f values.yaml

```

### Securing the Metoro Hub

Before deploying in production, you should change at least the following settings in the Metoro Hub Helm chart:

```yaml

apiserver:

defaultOnPremAdmin:

password: "CHANGE_ME_TO_SECURE_PASSWORD" # Change this to a secure password, you'll use this to log in to the UI for the first time

postgresql:

auth:

password: "CHANGE_ME_POSTGRES_PASSWORD" # Use a random password

clickhouse:

auth:

password: "CHANGE_ME_CLICKHOUSE_PASSWORD" # Use a random password

authSecret:

authMaterial: "CHANGE_ME_AUTH_MATERIAL" # Use a random string

temporal:

server:

config:

persistence:

default:

sql:

password: "CHANGE_ME_POSTGRES_PASSWORD" # Use the same password as above

visibility:

sql:

password: "CHANGE_ME_POSTGRES_PASSWORD" # Use the same password as above

onPrem:

isOnPrem: true

```

### Connecting the exporter to the Metoro Hub via helm

The exporter needs to be configured to connect to the Metoro hub. This can either be done through the UI or by setting the following values in the hub helm chart:

```yaml

apiserver:

defaultOnPremAdmin:

email: "YOUR_EMAIL"

password: "YOUR_PASSWORD"

name: "YOUR NAME"

organization: "YOUR ORGANIZATION"

environmentName: "YOUR ENVIRONMENT NAME"

```

Then when installing the exporter, you can set the following values:

```yaml

exporter:

secret:

externalSecret:

enabled: true

name: "on-prem-default-exporter-token-secret"

secretKey: "token"

```

### Using a different image registry

If you want to use a different image registry, you can set the `imagePullSecret` field in the Helm chart values file to a secret containing the pull secret.

```yaml

imagePullSecret:

name: "my-registry-credentials"

data: "dockerconfigjson-encoded-value"

```

### High Availability Setup

For production environments requiring high availability.

We also recommend using external databases for increased availability and performance. Check out the [external database configuration](#external-database-configuration) section for more details.

The postgres chart doesn't have great support for HA.

The Clickhouse chart has built-in HA support.

```yaml

ingester:

replicas: 2

autoscaling:

horizontalPodAutoscaler:

enabled: true

minReplicas: 2

maxReplicas: 4

targetCPUUtilizationPercentage: 60

apiserver:

replicas: 2

autoscaling:

horizontalPodAutoscaler:

enabled: true

minReplicas: 2

maxReplicas: 4

targetCPUUtilizationPercentage: 60

clickhouse:

enabled: true

persistence:

size: 100Gi

replicaCount: 3

postgresql:

enabled: true

persistence:

size: 20Gi

primary:

replicaCount: 3

```

### External Database Configuration

To use external databases instead of the built-in ones:

```yaml

clickhouse:

enabled: false

clickhouseSecret:

name: "clickhouse-secret"

clickhouseUrl: "clickhouse://xxxxxxx.us-east-1.aws.clickhouse.cloud:9440"

clickhouseUser: "username"

clickhousePassword: "password"

clickhouseDatabase: "metoro"

postgresql:

enabled: false

postgresSecret:

name: "postgres-secret"

postgresHost: "prod-us-east.cluster-xxxxxxx.us-east-1.rds.amazonaws.com"

postgresPort: "5432"

postgresUser: "postgres"

postgresPassword: "password"

postgresDatabase: "metoro"

# This needs to be matched with the postgresSecret values

temporal:

server:

config:

persistence:

default:

driver: sql

sql:

driver: postgres12

database: temporal

user: postgres

password: password

host: "prod-us-east.cluster-xxxxxxx.us-east-1.rds.amazonaws.com"

port: 5432

visibility:

driver: sql

sql:

driver: postgres12

database: temporal_visibility

user: postgres

password: CHANGE_ME

host: "prod-us-east.cluster-xxxxxxx.us-east-1.rds.amazonaws.com"

port: 5432

```

### Ingress Configuration

Enable ingress for external access:

```yaml

apiserver:

# Match this with the hostname of the ingress

deploymentUrl: http(s)://metoro.yourdomain.com

ingress:

enabled: true

className: "nginx"

annotations:

kubernetes.io/ingress.class: nginx

cert-manager.io/cluster-issuer: "letsencrypt-prod"

hosts:

- host: "metoro.yourdomain.com"

paths:

- path: /

pathType: Prefix

tls:

- secretName: metoro-tls

hosts:

- metoro.yourdomain.com

ingester:

ingress:

enabled: true

className: "nginx"

annotations:

kubernetes.io/ingress.class: nginx

cert-manager.io/cluster-issuer: "letsencrypt-prod"

hosts:

- host: "ingest.metoro.yourdomain.com"

paths:

- path: /

pathType: Prefix

tls:

- secretName: metoro-ingester-tls

hosts:

- ingest.metoro.yourdomain.com

```

## Maintenance

### Upgrading Metoro

Minor version upgrades can just be installed using a helm upgrade command.

```bash

helm upgrade --install --namespace metoro-hub ./ -f values.yaml

```

Major version upgrades will require a more in-depth migration process. Each major release will have a migration guide available on the Metoro website and in the helm chart itself.

## Support and Resources

For additional support:

* Reach out to us directly via your dedicated slack connect channel

* Join our [Slack community](https://join.slack.com/t/metorocommunity/shared_invite/zt-2makpjl5j-F0WcpGnPcdc8anbNGcewqw)

* Contact us at [support@metoro.io](mailto:support@metoro.io)

* Live chat via intercom on [metoro.io](https://metoro.io) (bottom right of the page)

## Configuration Reference

| Key | Type | Default | Description |

| --------------------------------------------------------------------------- | ------------- | ------------------------------------------- | --------------------------------------------------- |

| apiserver.defaultOnPremAdmin.email | string | "[admin@metoro.io](mailto:admin@metoro.io)" | Default admin email address set up on first login |

| apiserver.defaultOnPremAdmin.environmentName | string | "Default Environment" | Default environment name set up on first login |

| apiserver.defaultOnPremAdmin.name | string | "Admin" | Default admin name set up on first login |

| apiserver.defaultOnPremAdmin.organization | string | "Default Organization" | Default organization name set up on first login |

| apiserver.defaultOnPremAdmin.password | string | "admin123" | Default admin password set up on first login |

| apiserver.defaultOnPremAdmin.serviceAccount.annotations | object | `{}` | Service account annotations |

| apiserver.defaultOnPremAdmin.serviceAccount.create | boolean | `true` | Whether to create a service account |

| apiserver.deploymentUrl | string | `"https://somedeploymenturl.tld..."` | Deployment URL for the API server |

| apiserver.image.pullPolicy | string | `"IfNotPresent"` | Image pull policy for API server container |

| apiserver.image.repository | string | `"quay.io/metoro/metoro-apiserver"` | Docker image repository for API server |

| apiserver.ingress.annotations | object | `{"kubernetes.io/ingress.class": "nginx"}` | Ingress annotations for API server |

| apiserver.ingress.className | string | `"nginx"` | Ingress class name for API server |

| apiserver.ingress.enabled | boolean | `false` | Enable/disable ingress for API server |

| apiserver.ingress.hosts\[0].host | string | `"api.local.test"` | Ingress hostname for API server |

| apiserver.ingress.hosts\[0].paths\[0].path | string | `"/"` | Path for ingress rule |

| apiserver.ingress.hosts\[0].paths\[0].pathType | string | `"Prefix"` | Path type for ingress rule |

| apiserver.name | string | "apiserver" | Name of the API server component |

| apiserver.replicas | integer | 4 | Number of API server replicas |

| apiserver.resources.limits.cpu | string/number | 4 | CPU resource limit for API server |

| apiserver.resources.limits.memory | string | "16Gi" | Memory resource limit for API server |

| apiserver.resources.requests.cpu | string/number | 1 | Requested CPU resources for API server |

| apiserver.resources.requests.memory | string | "2Gi" | Requested memory resources for API server |

| apiserver.service.name | string | "apiserver" | Name of the API server service |

| apiserver.service.port | integer | 80 | Service port for API server |

| apiserver.service.targetPort | integer | 8080 | Target port for API server service |

| apiserver.service.type | string | "ClusterIP" | Kubernetes service type for API server |

| authSecret.authMaterial | string | "SOME\_AUTH\_MATERIAL" | Authentication material used to sign JWTs |

| authSecret.name | string | "auth-secret" | Name of the authentication secret |

| clickhouse.containerPorts.tcp | integer | 9440 | TCP container port for in-cluster ClickHouse |

| clickhouse.containerPorts.tcpSecure | integer | 20434 | Secure TCP container port for in-cluster ClickHouse |

| clickhouse.enabled | boolean | false | Enable/disable in-cluster ClickHouse installation |

| clickhouse.persistence.size | string | "100Gi" | Storage size for in-cluster ClickHouse |

| clickhouse.replicaCount | integer | 1 | Number of ClickHouse replicas |

| clickhouse.resourcesPreset | string | "2xlarge" | Resource preset for ClickHouse |

| clickhouse.secret.clickhouseDatabase | string | "SOME\_CLICKHOUSE\_DATABASE" | ClickHouse database name |

| clickhouse.secret.clickhousePassword | string | "SOME\_CLICKHOUSE\_PASSWORD" | ClickHouse password |

| clickhouse.secret.clickhouseUrl | string | "SOME\_CLICKHOUSE\_HOST" | ClickHouse URL |

| clickhouse.secret.clickhouseUser | string | "SOME\_CLICKHOUSE\_USER" | ClickHouse user |

| clickhouse.secret.name | string | "clickhouse-secret" | Name of the ClickHouse secret |

| clickhouse.service.ports.tcp | integer | 9440 | TCP port for ClickHouse |

| clickhouse.service.ports.tcpSecure | integer | 20434 | Secure TCP port for ClickHouse |

| clickhouse.shards | integer | 1 | Number of ClickHouse shards |

| clickhouse.zookeeper.enabled | boolean | false | Enable/disable ZooKeeper for ClickHouse |

| environment | string | "none" | Environment for the deployment |

| imagePullSecret.data | string | "SOME\_DOCKERHUB\_CREDENTIAL" | Registry credentials in dockerconfigjson format |

| imagePullSecret.name | string | "dockerhub-credentials" | Name of the Docker registry credentials secret |

| ingester.autoscaling.horizontalPodAutoscaler.enabled | boolean | true | Enable/disable HPA for ingester |

| ingester.autoscaling.horizontalPodAutoscaler.maxReplicas | integer | 10 | Maximum number of replicas for HPA |

| ingester.autoscaling.horizontalPodAutoscaler.minReplicas | integer | 4 | Minimum number of replicas for HPA |

| ingester.autoscaling.horizontalPodAutoscaler.name | string | "metoro-ingester-hpa" | Name of the HPA |

| ingester.autoscaling.horizontalPodAutoscaler.targetCPUUtilizationPercentage | integer | 60 | Target CPU utilization percentage |

| ingester.configMap.name | string | "ingester-config" | Name of the ingester ConfigMap |

| ingester.image.pullPolicy | string | "IfNotPresent" | Image pull policy for ingester container |

| ingester.image.repository | string | "quay.io/metoro/metoro-ingester" | Docker image repository for ingester |

| ingester.name | string | "ingester" | Name of the ingester component |

| ingester.replicas | integer | 4 | Number of ingester replicas |

| ingester.resources.limits.cpu | string/number | 4 | CPU resource limit for ingester |

| ingester.resources.limits.memory | string | "16Gi" | Memory resource limit for ingester |

| ingester.resources.requests.cpu | string/number | 1 | Requested CPU resources for ingester |

| ingester.resources.requests.memory | string | "2Gi" | Requested memory resources for ingester |

| onPrem.isOnPrem | boolean | false | Flag for on-premises deployment |

| postgresql.enabled | boolean | false | Enable/disable in-cluster PostgreSQL |

| postgresql.auth.postgresPassword | string | "CHANGE\_ME" | PostgreSQL password |

| postgresql.persistence.size | string | "2Gi" | Storage size for PostgreSQL |

| temporal.enabled | boolean | false | Enable/disable Temporal |

| temporal.server.replicaCount | integer | 1 | Number of Temporal server replicas |

| versions.onprem.apiserver | string | "0.856.0" | API server version |

| versions.onprem.ingester | string | "0.856.0" | Ingester version |

# Alerts Overview

Source: https://metoro.io/docs/alerts-monitoring/alerts-overview

Set up alerts and monitoring in Metoro

Metoro provides comprehensive alerting and monitoring capabilities to help you stay on top of your Kubernetes infrastructure and applications. You can set up alerts based on various metrics, logs, traces and details/configuration of your kubernetes resources to get notified when something needs attention.

## Alert Types

Metoro supports several types of alerts:

1. **Metric Alerts**: Monitor any metric collected by Metoro, including:

* CPU and memory usage

* Network traffic

* Custom metrics from your applications

* Container metrics

* Node metrics

2. **Log Alerts**: Set up alerts based on log patterns or frequencies:

* Error frequency

* Specific log patterns using regex (re2 format)

* Log volume anomalies with log attribute filtering

* Custom log queries

3. **Trace Alerts**: Monitor your application's performance:

* Latency thresholds

* Error rates

* Request volume

* Service dependencies

4. **Kubernetes Resource Alerts**: Monitor the state of your Kubernetes resources:

* Pod status (e.g., CrashLoopBackOff, Pending)

* Number of replicas

* Resource limits and requests

## Managing Alerts

There are two ways to manage your alerts in Metoro:

1. Using the **Alerts page** in the Metoro UI.

* You can create, edit, and delete alerts directly from the UI.

* The UI provides a user-friendly interface for configuring alert conditions and notifications.

2. Defining your alerts in a Kubernetes ConfigMap.

* This is useful for version control and managing alerts as code.

* Every hour, Metoro will check for changes in the ConfigMap and update the alerts accordingly.

## Alert Resolution

When an alert is triggered:

1. The alert status changes to "Firing"

2. Notifications are sent to configured destinations (if not muted)

3. The alert remains active until:

* The condition returns to normal

* The alert is deleted

# Creating/Updating an Alert

Source: https://metoro.io/docs/alerts-monitoring/create_alert

Set up alerts in Metoro

# Creating and Managing Alerts in Metoro

Metoro provides two methods for creating and managing alerts:

1. Using the Metoro UI

2. Using Kubernetes ConfigMaps

## Managing Alerts Using the UI

To create a new alert using the Metoro UI:

1. Navigate to the [Alerts page](https://us-east.metoro.io/alerts)

2. Click on the **Create Alert** button

3. Configure your alert conditions. For more information about alert configuration, please check the [API documentation](https://metoro.io/docs/api-reference/alerts/createupdate-alert#body-alert-metadata).

You can update existing alerts by clicking on the alert name in the list. This will take you to the alert details page.

You can click `Edit` to modify alert configuration.

You need 'update' permissions on alerts to edit an alert. If you don't have these permissions, the `Edit` button will be disabled.

## Managing Alerts Using Kubernetes ConfigMaps

For organizations that prefer Infrastructure as Code (IaC) practices, Metoro supports creating and managing alerts using Kubernetes ConfigMaps.

### ConfigMap Requirements

1. ConfigMaps must include the label `metoro.io/alert: "true"` to be recognized by Metoro as an alert definition.

* ConfigMaps without this label will be ignored by Metoro.

2. The ConfigMap data must include a key named `alert.yaml` with `alerts` and the toplevel key containing the alert definition(s).

3. The alert definition must follow the format specified in the [API documentation](https://metoro.io/docs/api-reference/alerts/createupdate-alert#body-alert-metadata).

### Alert Synchronization

* Alerts defined in ConfigMaps are automatically synced with Metoro **once every hour**.

* Alerts managed via ConfigMaps cannot be edited through the UI to prevent conflicts.

* Any changes made via the API would be overwritten during the next sync cycle.

* If you delete a ConfigMap, the corresponding alert will *not* be deleted in Metoro. This is to prevent cluster outages deleting alerts etc. After removing the configmap you should subsequently delete the alert in the UI. Subsequent syncs will not recreate the alert.

### Manual Synchronization

If you don't want to wait for the hourly sync, you can:

* Use the "Sync Alerts" button on the Alerts page in the Metoro UI

* Call the endpoint `GET "/api/v1/alerts/sync"` with your auth token. For more information, please check the [API documentation](https://metoro.io/docs/api-reference/alerts/sync-alerts-from-configmaps).

### Example ConfigMap

Please check the API documentation for the full alert definition format. Below is a simple example of a ConfigMap that defines an alert:

You have to provide an unique id for the alert to ensure that the alert can be referenced in the future.

```yaml

kind: ConfigMap

apiVersion: v1

metadata:

name: alert-config

labels:

metoro.io/alert: "true"

data:

alert.yaml: |

alerts:

- metadata:

id: "trace-count-alert-001"

name: "Trace Count Upper Bound"

description: "Alert when there are too many traces"

type: timeseries

timeseries:

expression:

metoroQLTimeseries:

query: "count(traces)"

bucketSize: 60

evaluationRules:

- name: critical

type: static

static:

operators:

- operator: greaterThan

threshold: 15

persistenceSettings:

datapointsToAlarm: 3

datapointsInEvaluationWindow: 5

missingDatapointBehavior: notBreaching

```

For more examples, please check the [Example Alerts](https://metoro.io/docs/alerts-monitoring/example_alerts) section.

### Troubleshooting ConfigMap Alert Synchronization

If you don't see your alerts being created or updated from ConfigMaps, the issue is most likely due to one of the following reasons:

1. **Ingestion Delay**: Allow at least one full minute for your new ConfigMap definition to be ingested by Metoro. After this period, try to sync alerts manually either via the UI or the API.

2. **Missing or Incorrect Labels**: Double-check that your ConfigMap has the necessary label `metoro.io/alert: "true"` as described in the [ConfigMap Requirements](#configmap-requirements) section.

**Invalid Alert Definition**: If your alert is still not appearing, the most likely cause is an invalid alert definition format. In this case, Metoro will log an error in your account. You can find these error logs by filtering for:

* Environment: `metoro-internal`

* Service name: `configmap-to-alert`

These logs will contain the specific reason why your alert definition could not be processed.

# Alert Examples

Source: https://metoro.io/docs/alerts-monitoring/example_alerts

List of example alerts

Here are some example alerts you can set up in Metoro to monitor your Kubernetes infrastructure and applications. These examples cover various scenarios, including CPU usage, error rates, and latency.

If you are using Kubernetes ConfigMaps to manage your alerts, you can define these alerts in a ConfigMap and apply it to your cluster. Make sure to include the label `metoro.io/alert: "true"` in your ConfigMap.

## Complete Example ConfigMap

You can use the following ConfigMap to set up all example alerts at once:

```yaml

kind: ConfigMap

apiVersion: v1

metadata:

name: alert-config

labels:

metoro.io/alert: "true"

data:

alert.yaml: |

alerts:

- metadata:

id: "cpu-usage-alert-001"

name: "High CPU Usage"

description: "Alert when CPU usage exceeds 80% for 5 minutes"

type: timeseries

timeseries:

expression:

metoroQLTimeseries:

query: sum(container_resources_cpu_usage_seconds_total{service_name="/k8s/default/myimportantservice"}) / 60 / sum(container_resources_cpu_limit_cores{service_name="/k8s/default/myimportantservice"})

bucketSize: 60

evaluationRules:

- name: "warning"

type: static

static:

operators:

- operator: greaterThan

threshold: 80

persistenceSettings:

datapointsToAlarm: 5

datapointsInEvaluationWindow: 5

missingDatapointBehavior: notBreaching

- metadata:

id: "error-log-alert-001"

name: "High Error Rate"

description: "Alert when error logs exceed 100 in 15 minutes"

type: timeseries

timeseries:

expression:

metoroQLTimeseries:

query: count(logs{log_level="error"})

bucketSize: 60

evaluationRules:

- name: "critical"

type: static

static:

operators:

- operator: greaterThan

threshold: 100

persistenceSettings:

datapointsToAlarm: 15

datapointsInEvaluationWindow: 15

missingDatapointBehavior: notBreaching

- metadata:

id: "high-latency-alert-001"

name: "High Latency"

description: "Alert when HTTP request duration exceeds 2 seconds for 5 minutes"

type: timeseries

timeseries:

expression:

metoroQLTimeseries:

query: trace_duration_quantile(0.99, traces)

bucketSize: 60

evaluationRules:

- name: "warning"

type: static

static:

operators:

- operator: greaterThan

threshold: 2

persistenceSettings:

datapointsToAlarm: 5

datapointsInEvaluationWindow: 5

missingDatapointBehavior: notBreaching

- metadata:

id: "latency-with-notifications-001"

name: "High Latency with Notifications"

description: "Alert when HTTP request duration exceeds 2 seconds for 5 minutes with notifications"

type: timeseries

timeseries:

expression:

metoroQLTimeseries:

query: trace_duration_quantile(0.99, traces)

bucketSize: 60

evaluationRules:

- name: "Warning"

type: static

static:

operators:

- operator: greaterThan

threshold: 2

persistenceSettings:

datapointsToAlarm: 5

datapointsInEvaluationWindow: 5

missingDatapointBehavior: notBreaching

actions:

- type: slack

slackDestination:

channel: "alerts-critical"

additionalMessage: "Service availability has dropped below SLA threshold!"

- type: email

emailDestination:

emails:

- "oncall@example.com"

- "sre-team@example.com"

```

# Create/Update Alert

Source: https://metoro.io/docs/api-reference/alerts/createupdate-alert

api-reference/openapi.yaml post /alerts/update

Create a new alert based on the provided configuration or update an existing one if the alert.metadata.id matches an existing alert.

# Sync Alerts From ConfigMaps

Source: https://metoro.io/docs/api-reference/alerts/sync-alerts-from-configmaps

api-reference/openapi.yaml get /alerts/sync

Trigger immediate synchronization of alerts from Kubernetes ConfigMaps. By default, alerts are synced from ConfigMaps once every hour. This endpoint allows triggering the sync process manually without waiting for the scheduled sync.

# Product Updates

Source: https://metoro.io/docs/changelog/overview

New updates and improvements

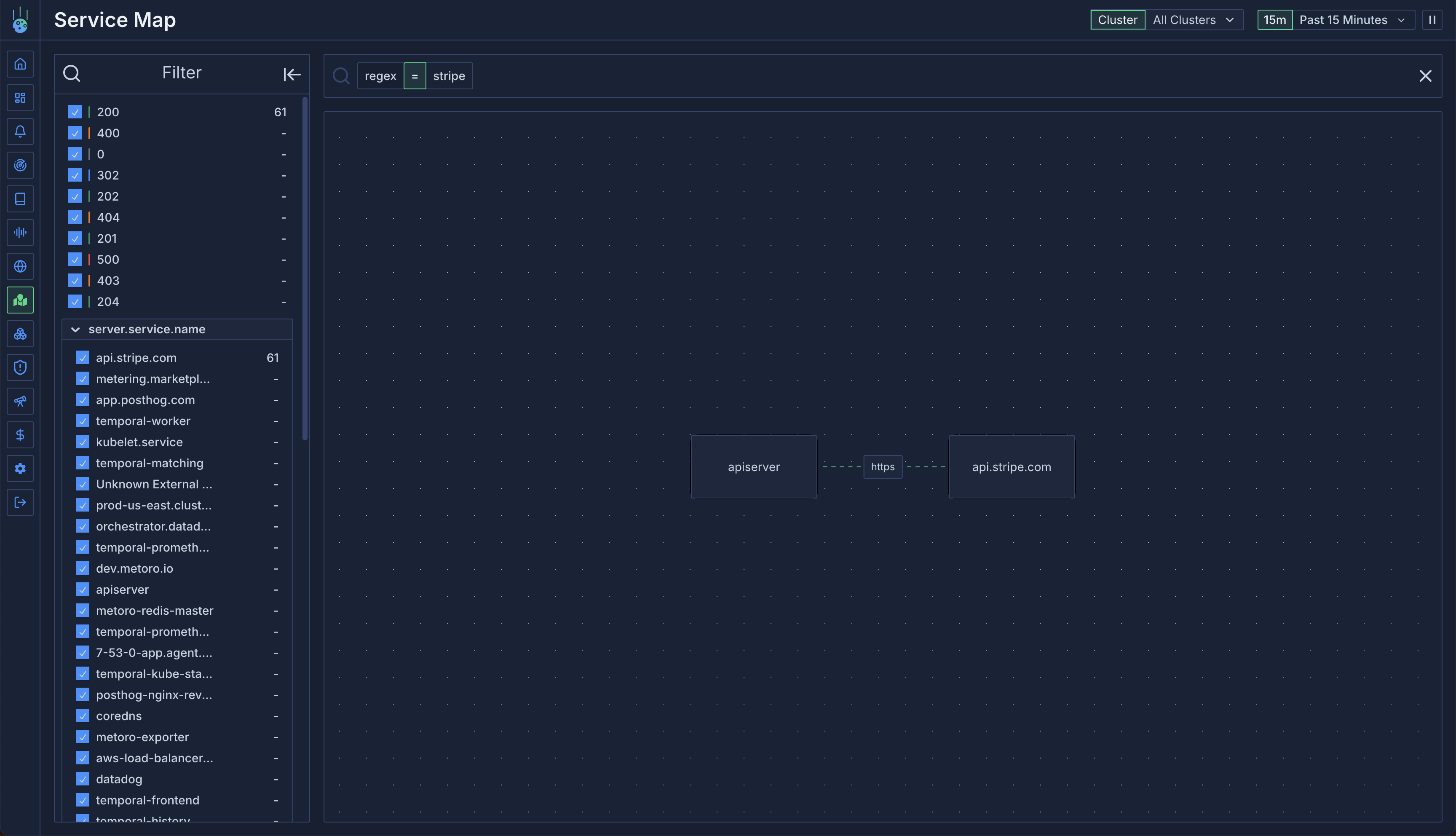

## Enhanced Search & Filtering

Power up your observability with advanced regex search capabilities:

* Regex search support across all resource types:

* Logs: Search through log messages and attributes

* Traces: Filter spans by attributes and service names

* Metrics: Search metric names and label values

* Kubernetes: Find resources using regex patterns

## New Visualization Features

* **Area Charts**: Visualize data trends with beautiful area graphs

* **Histogram Support**: Better understand data distribution with histogram metrics

* Native support for histogram metric types

* Visualize distribution of values over time

* Perfect for latency and request duration analysis

## Role-Based Access Control (RBAC)

Granular access control for your organization:

* Fine-grained permissions for different resource types

* Built-in admin and user roles

* Custom role creation with specific permissions

* Resource-level access management

* [Learn more about RBAC](/user-management/overview)

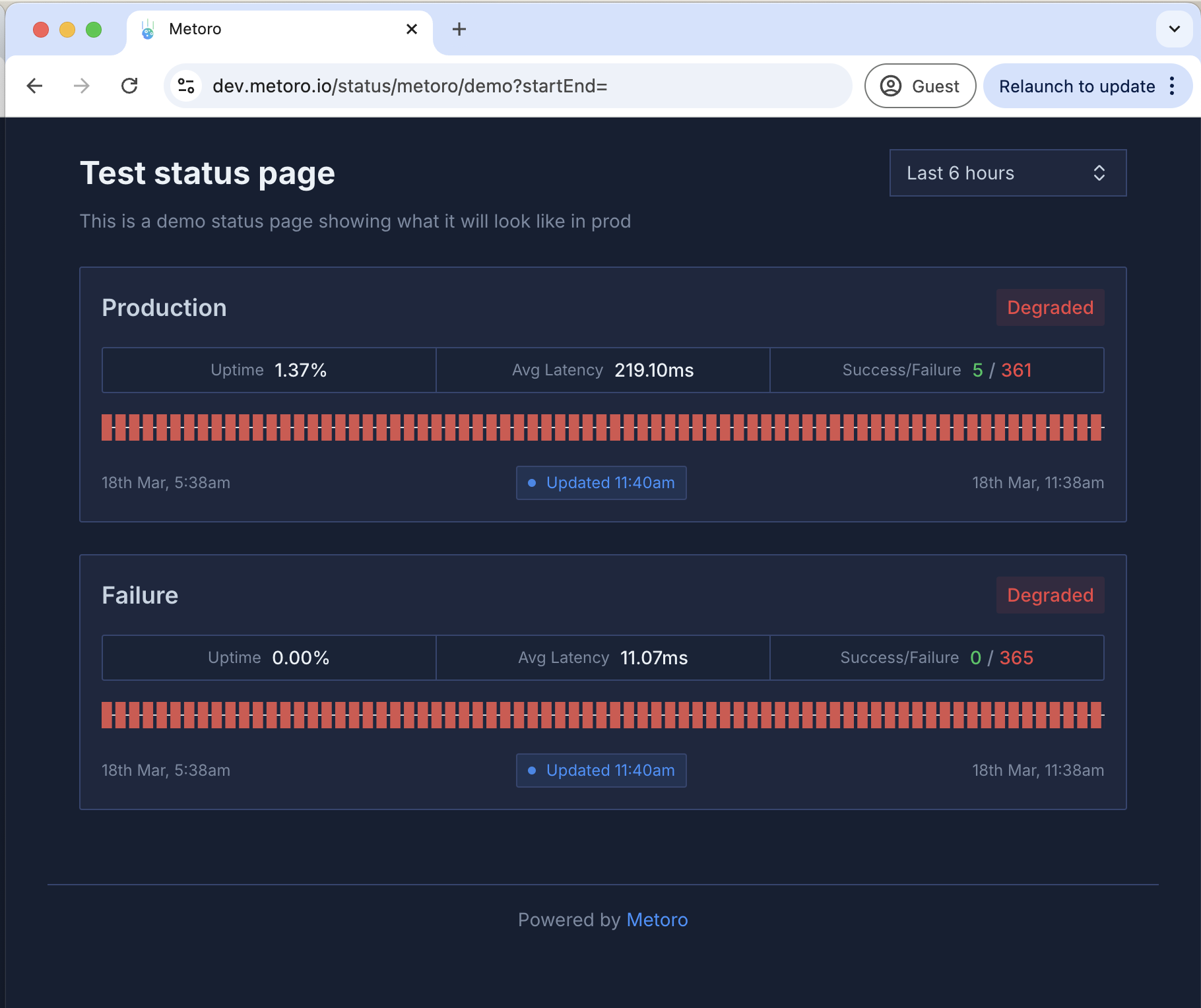

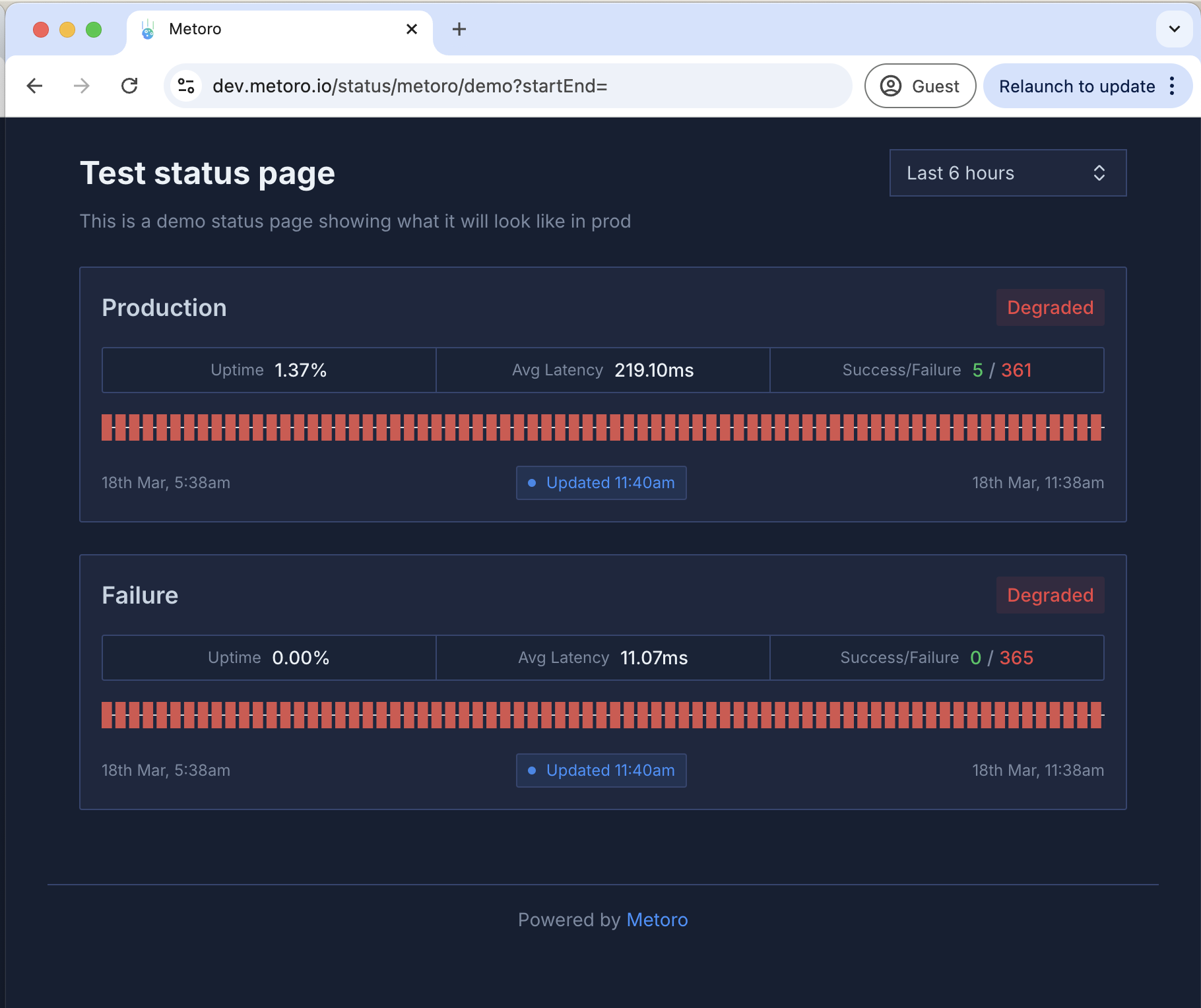

## Uptime Monitoring & Status Pages

Introducing comprehensive uptime monitoring and status page capabilities:

* Monitor endpoint availability and performance:

* Custom check intervals

* Create status pages:

* Historical uptime metrics

* Public and private pages

* Detailed performance metrics:

* Response times

* Availability percentages

* Historical data

* [Learn more about Uptime Monitoring](/uptime-monitoring/overview)

## Advanced Log Analytics & Metrics

Transform your logs into powerful metrics with our new log analytics features:

* Convert any log into metrics with support for JSON value filters and regex matching

* Chart log-based metrics directly in your dashboards

* Create sophisticated alerts combining multiple log patterns and conditions

## Enhanced Plotting and Alerting Capabilities

Take your alerting to the next level with multi-metric alerts and complex formulas:

* Combine multiple metrics for more meaningful alerts

* Create alerts based on metric ratios (e.g., error rate = 5XX / total traces \* 100)

* Use advanced formulas with support for:

* Arithmetic: `+`, `-`, `*`, `/`, `%`, `^`, `**`

* Comparison: `==`, `!=`, `<`, `>`, `<=`, `>=`

* Logical: `not`, `!`, `and`, `&&`, `or`, `||`

* Conditional: `?:` ternary operator

* Import and export alerts in JSON format

## New Visualization Options

* **Stat/Table Widget**: Display single values or tables instead of time series

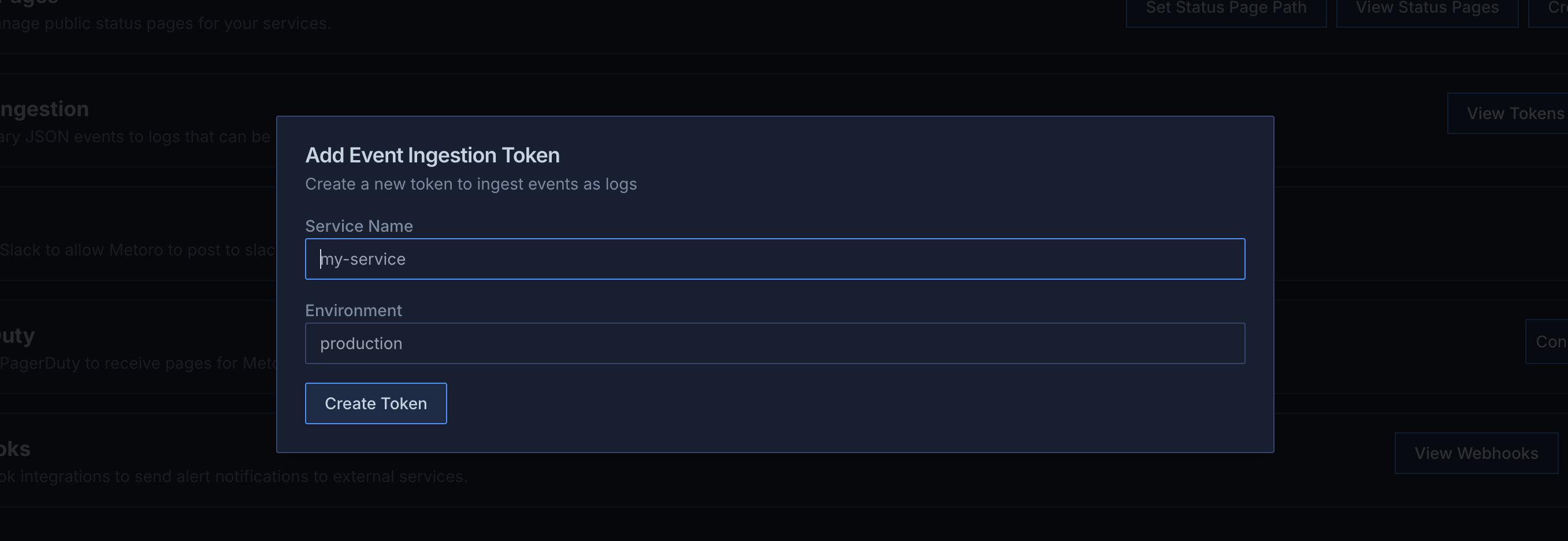

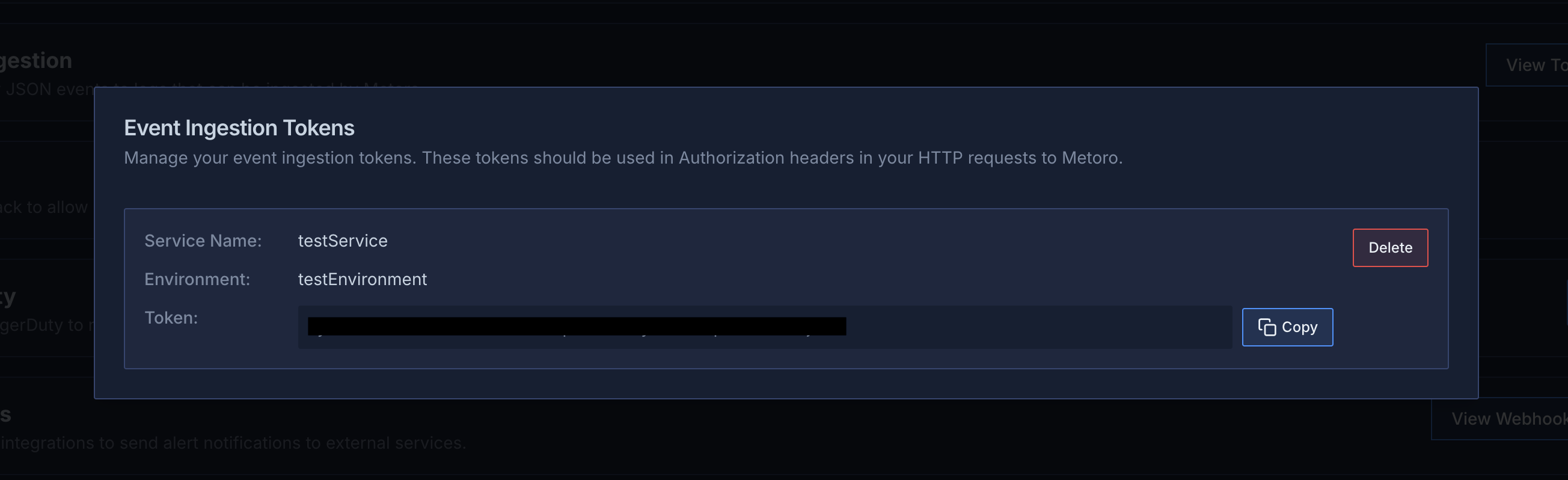

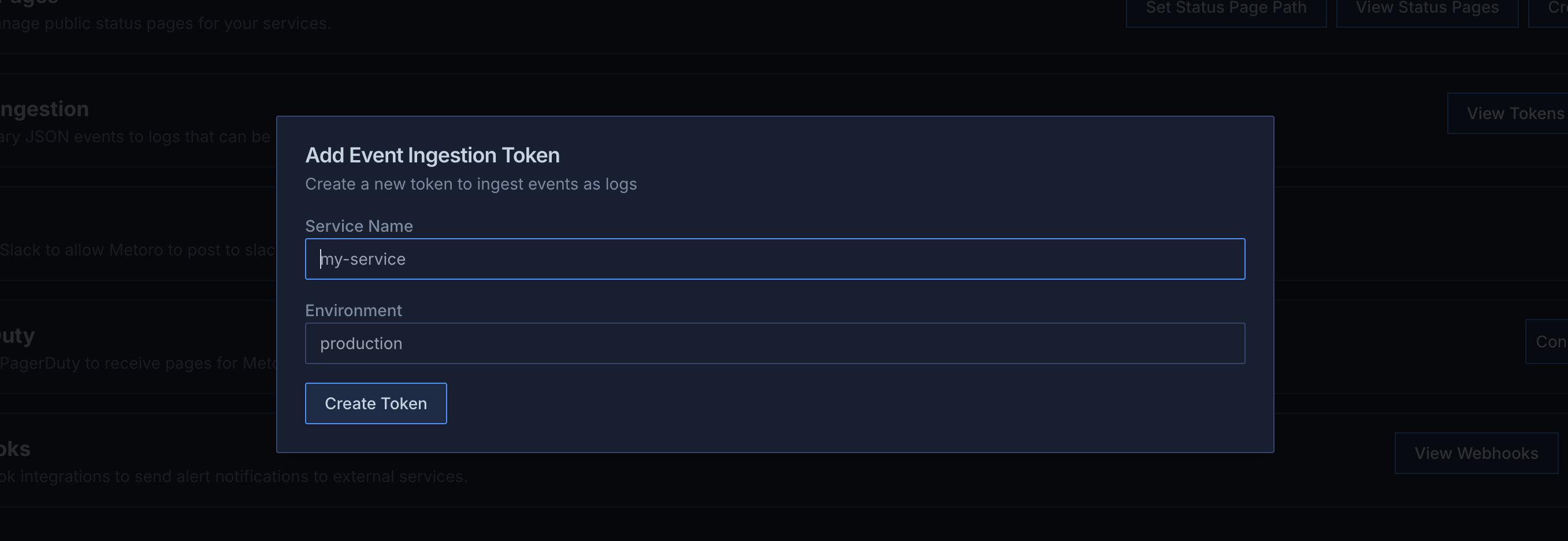

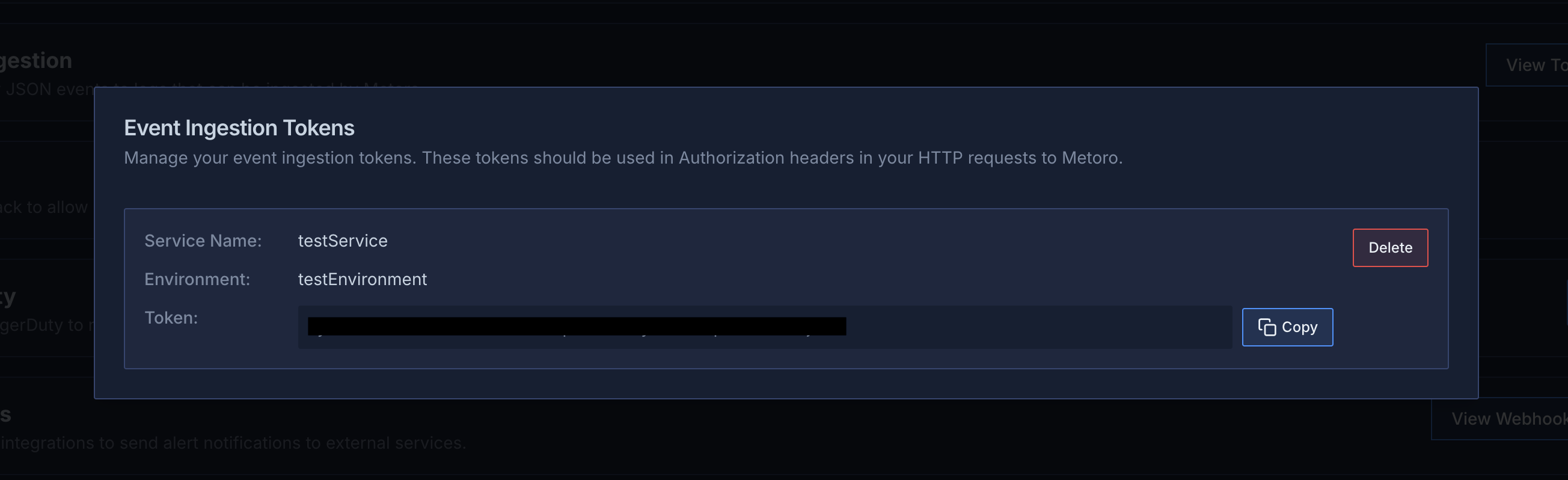

## Event Ingestion API

* New hosted ingestion endpoint for custom events

* Secure token-based authentication

* Automatic conversion of events to queryable logs

* [Learn more about event ingestion](/integrations/event-ingestion)

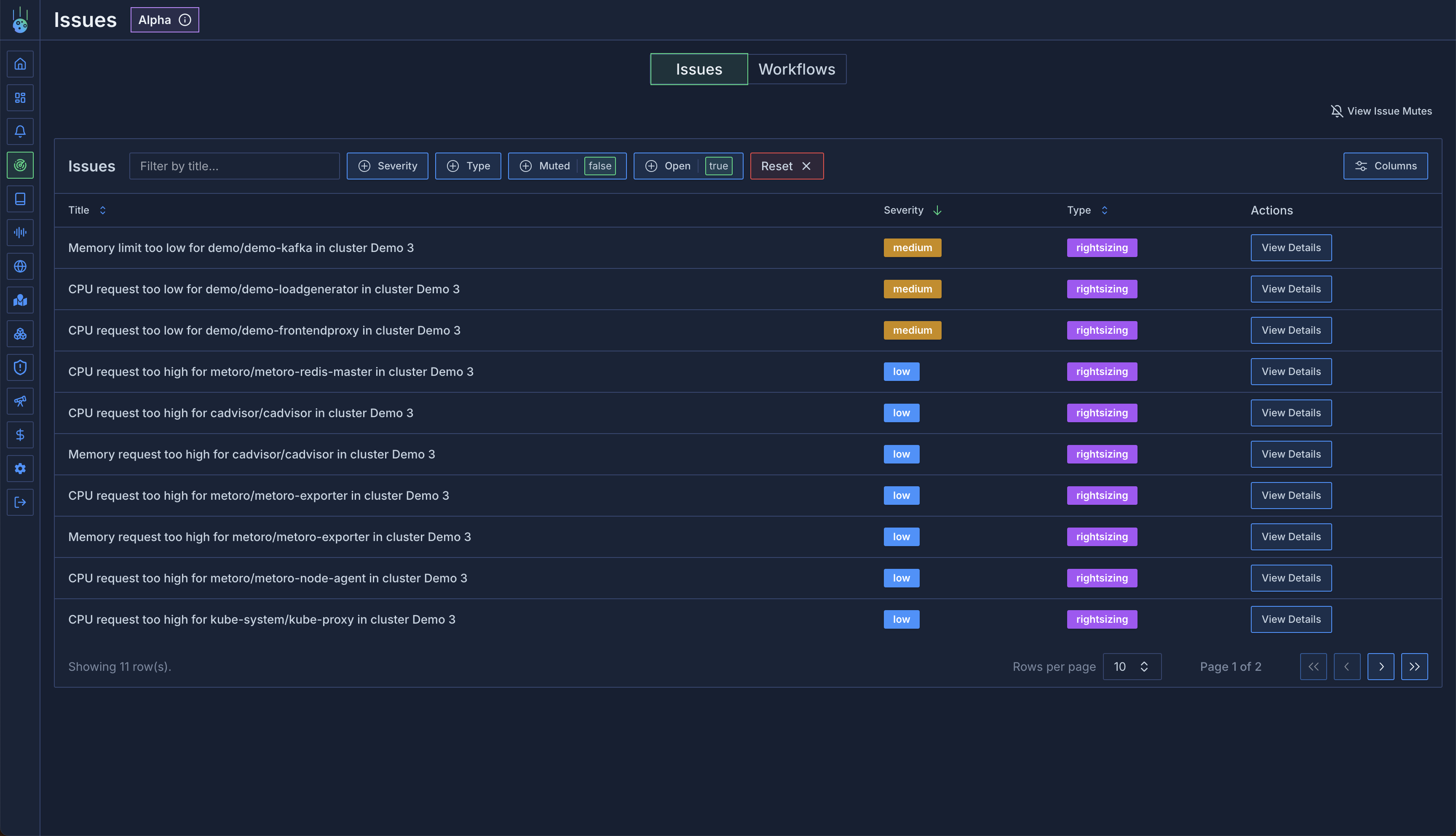

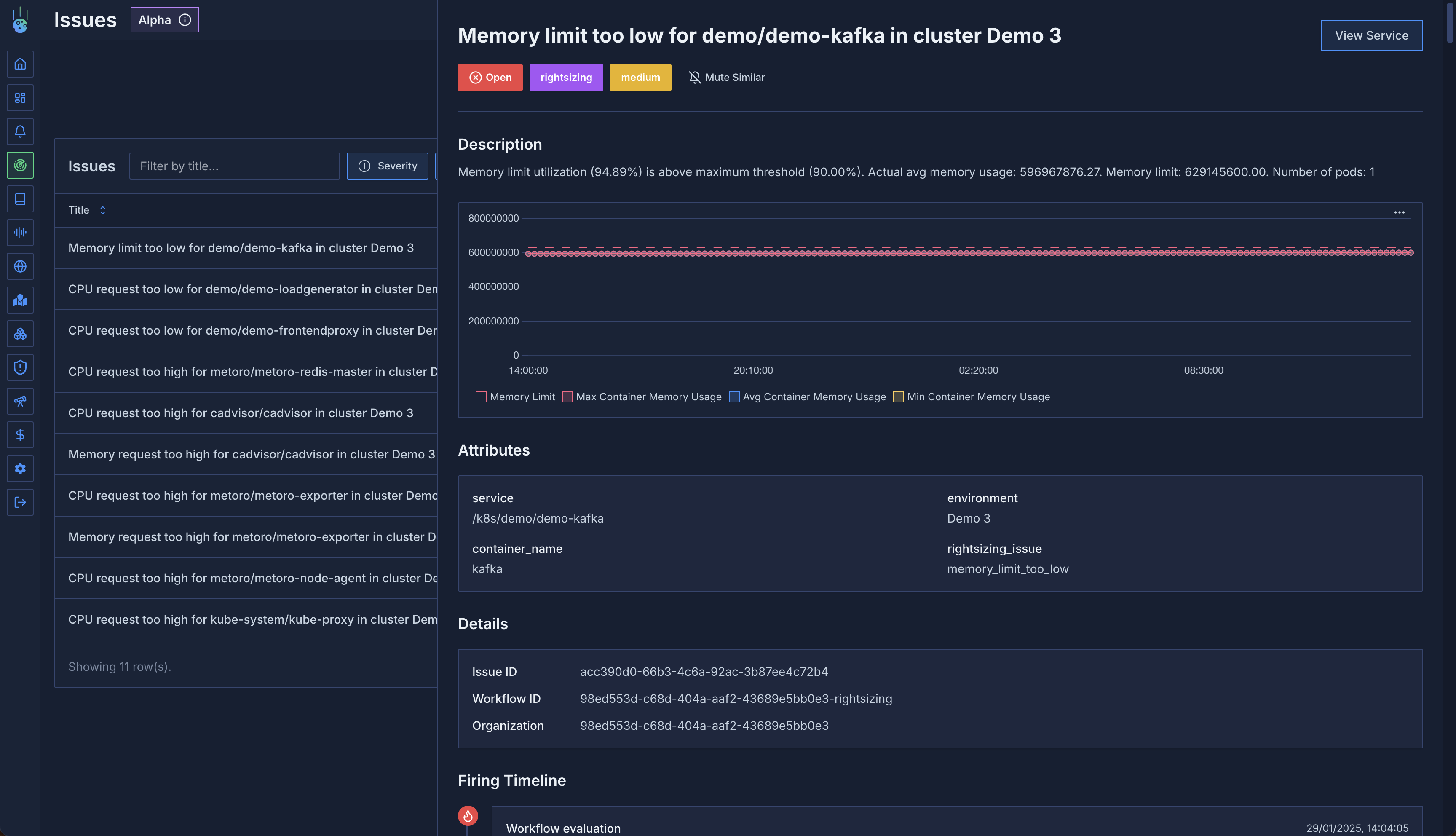

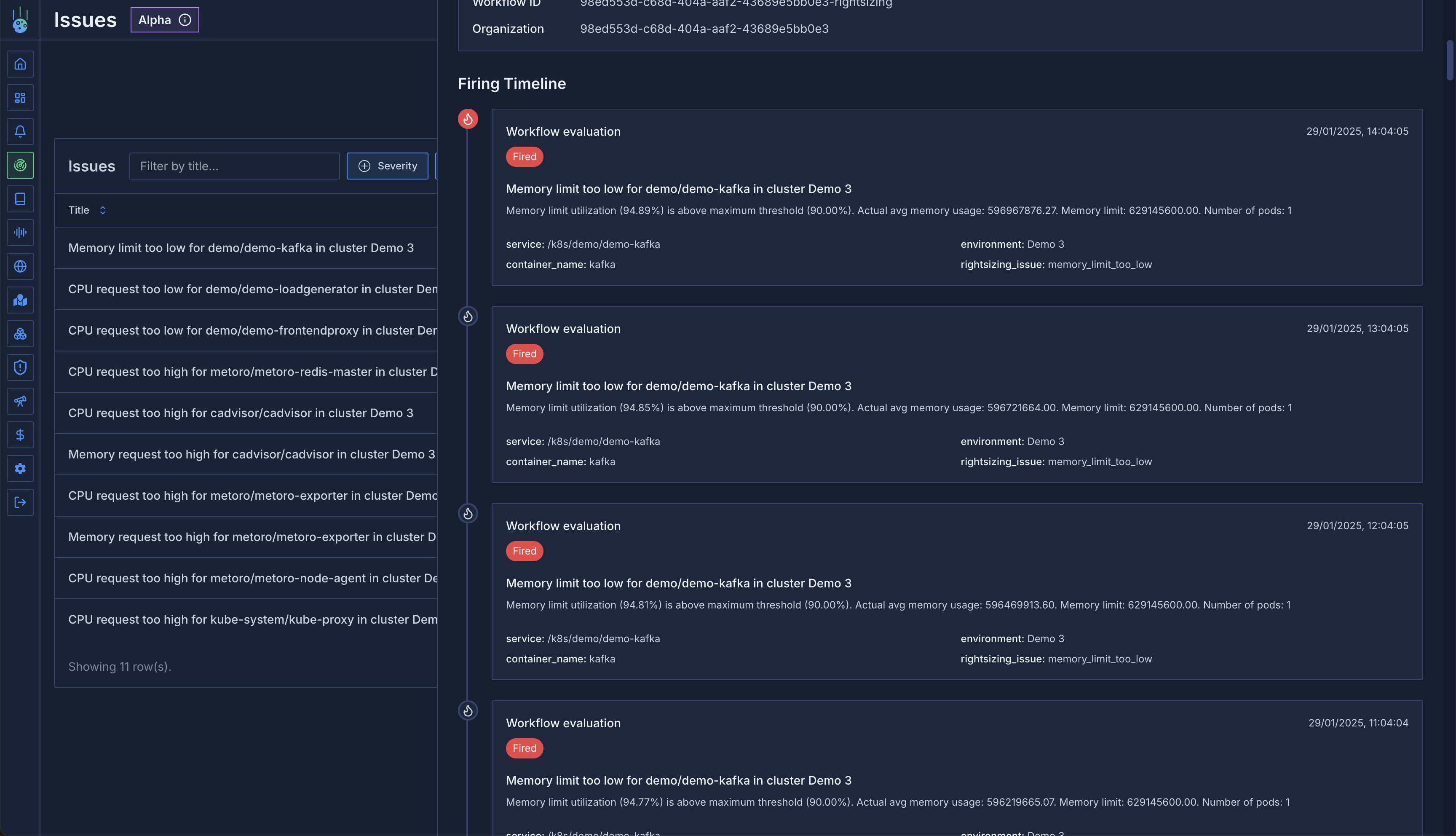

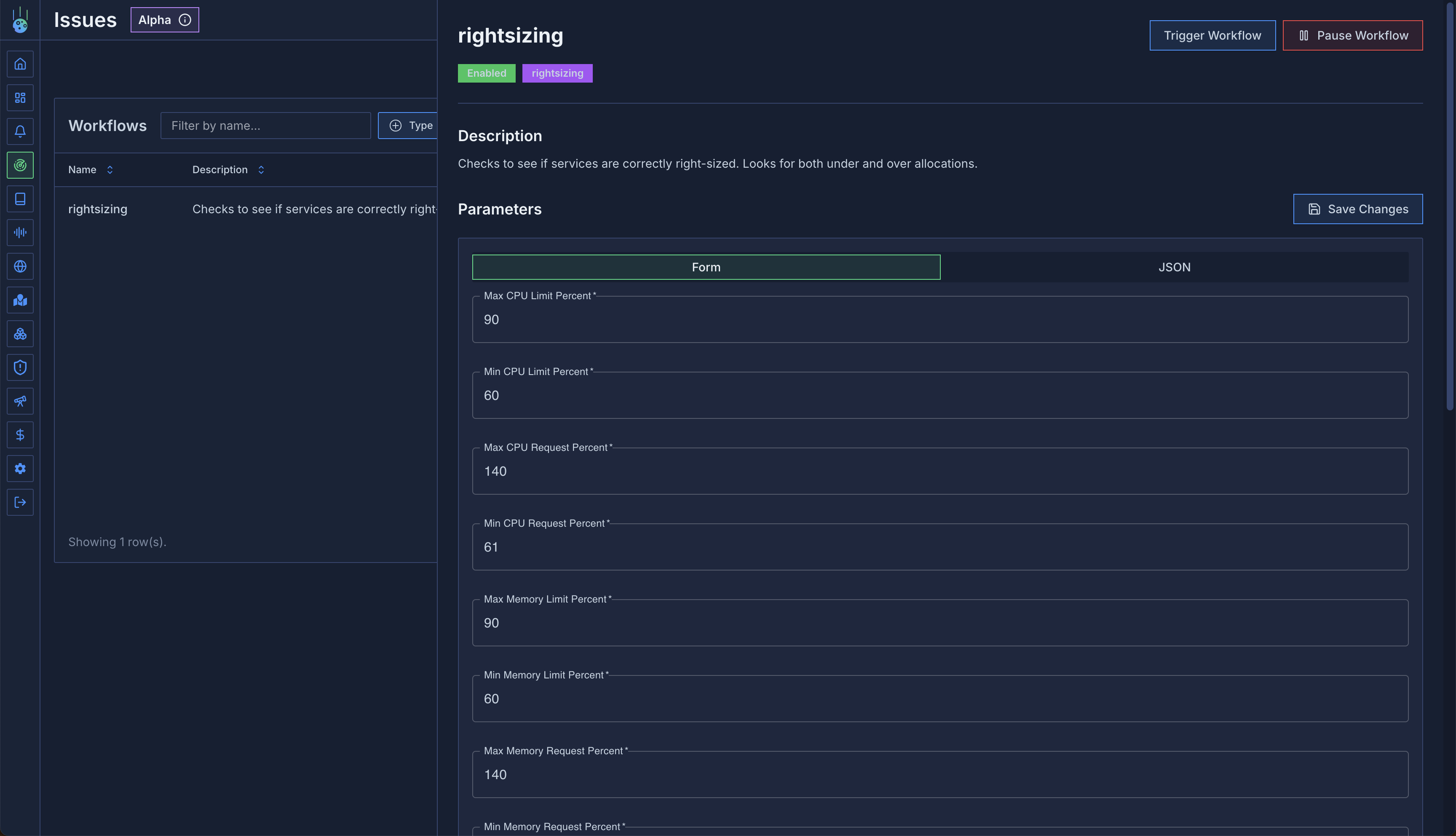

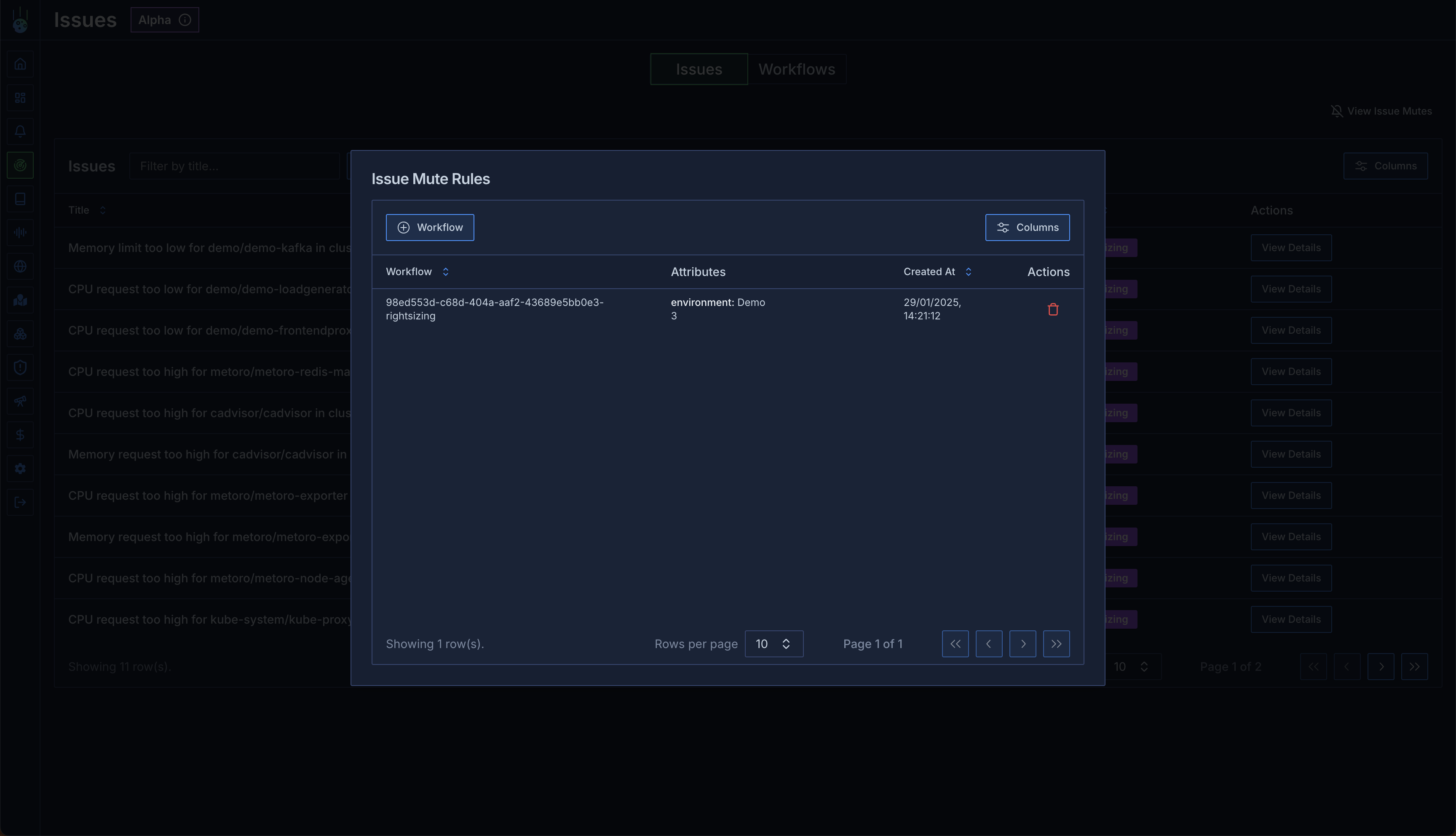

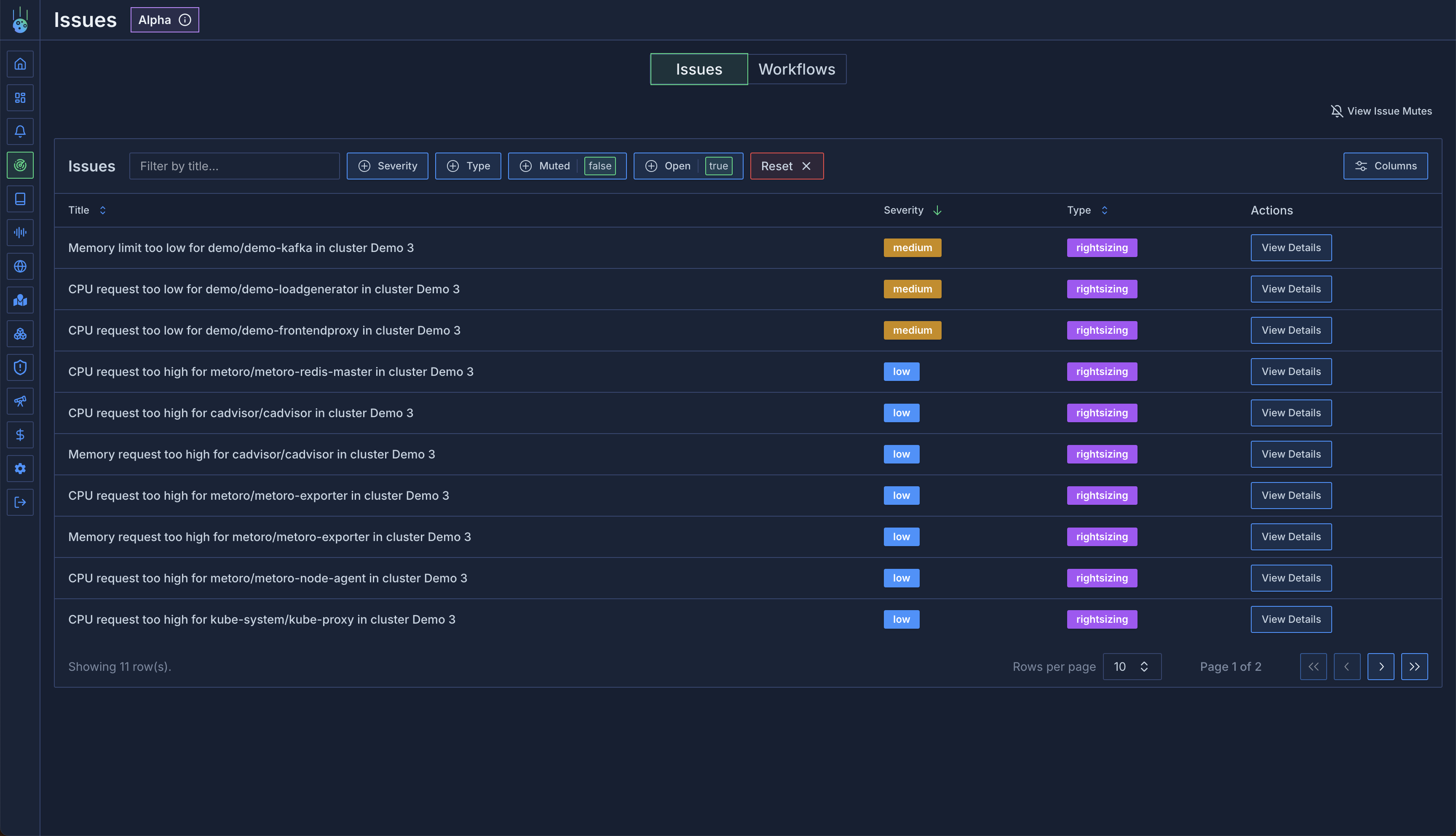

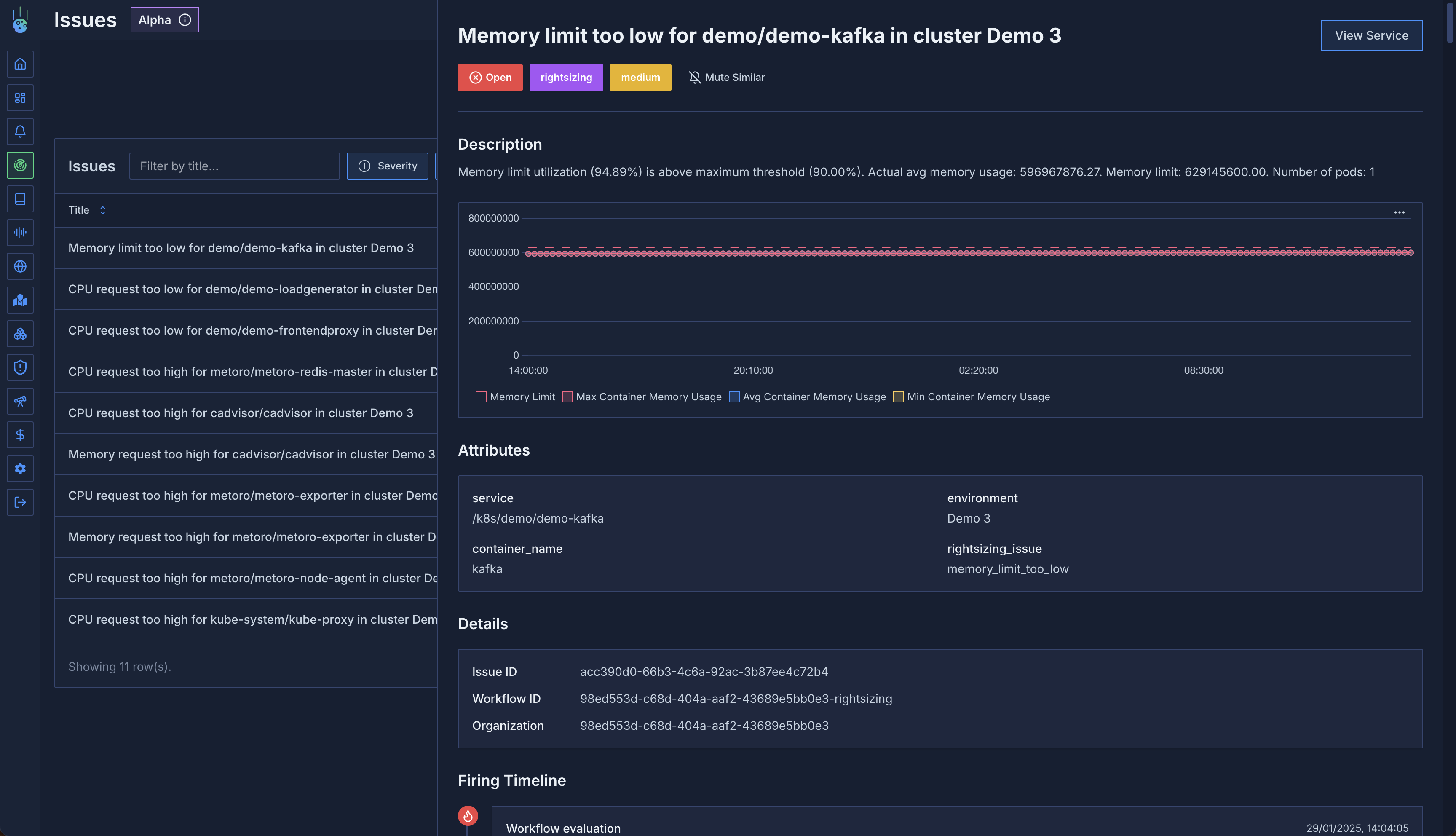

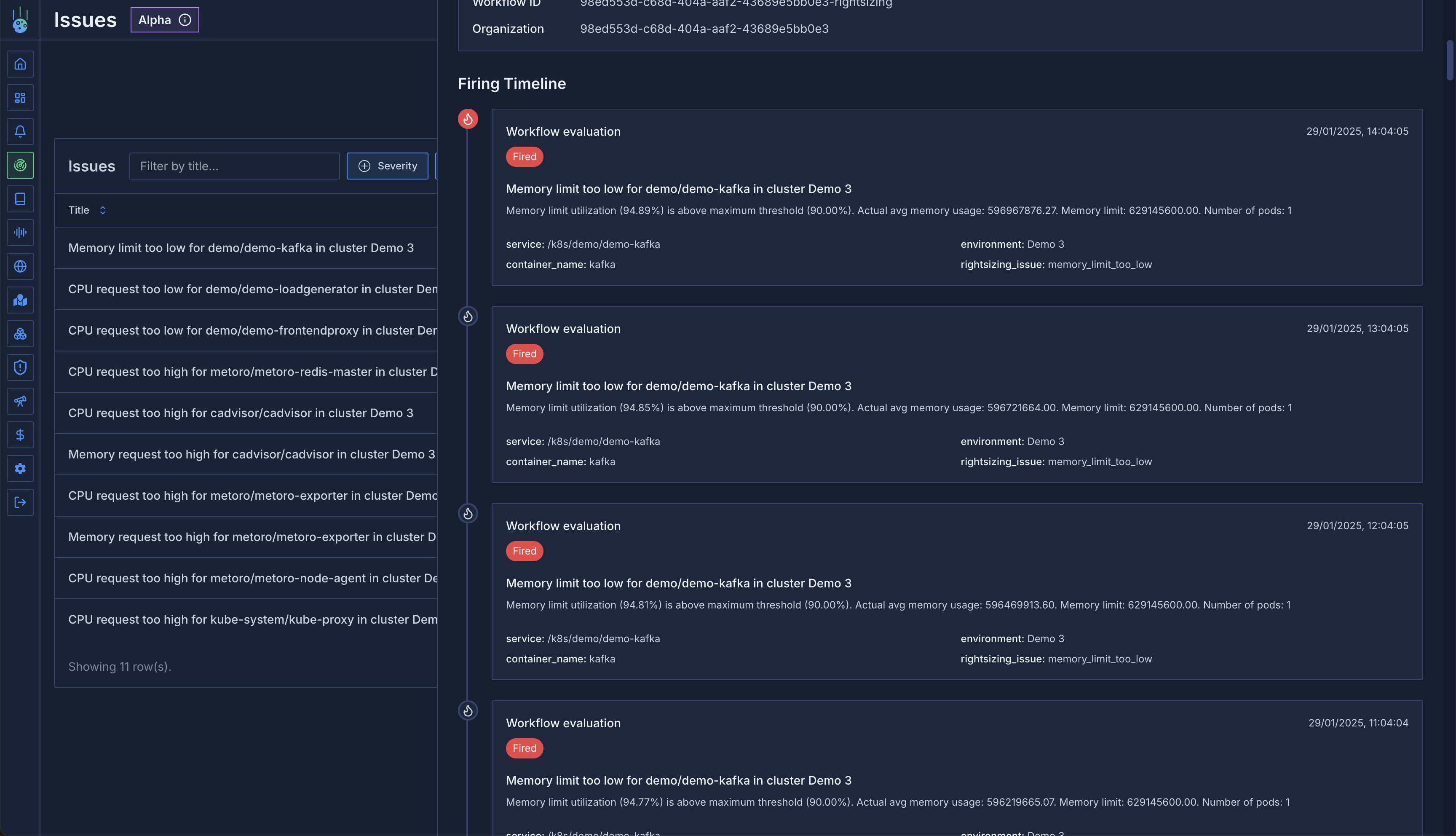

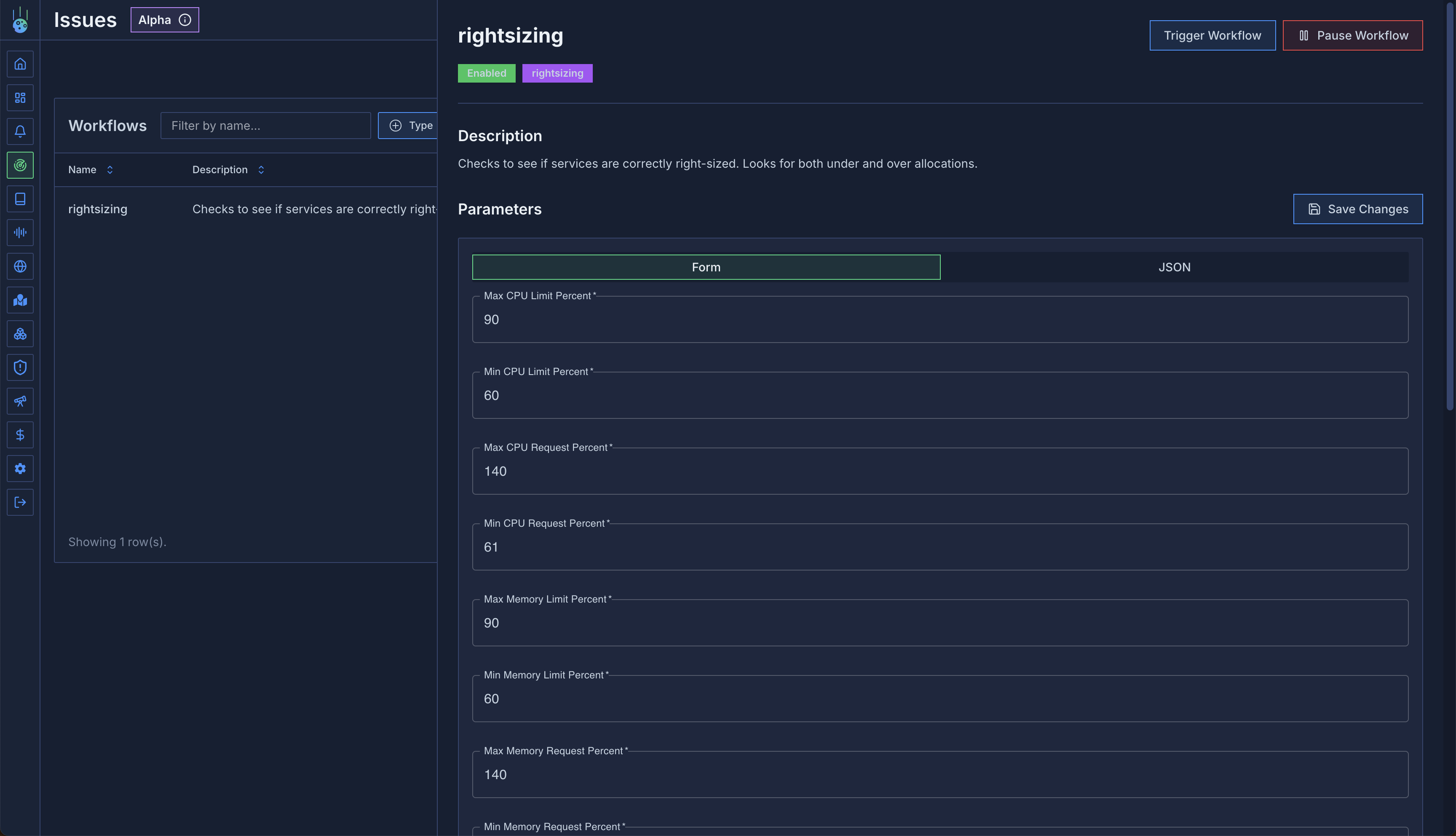

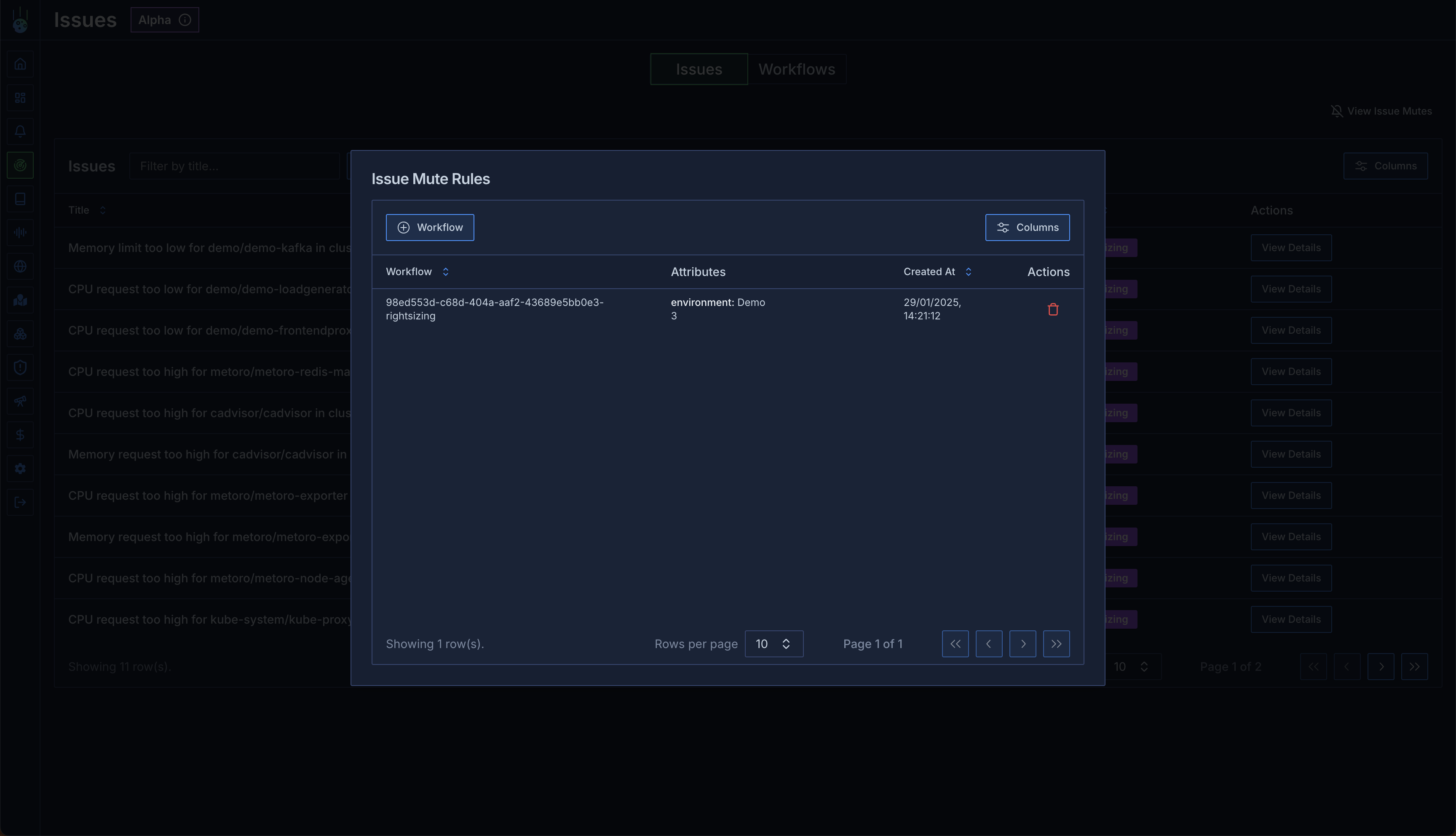

## Issues and Workflows

Introducing automated issue detection and workflow management:

* Intelligent detection of common Kubernetes issues:

* OOM (Out of Memory) events

* CPU throttling incidents

* Resource right-sizing recommendations

* Customizable workflows for issue resolution

* Automated tracking and management of detected issues

* Integration with existing alert channels

* [Learn more about Issue Detection](/issue-detection/overview)

## Dashboard Improvements

* Predefined dashboard templates for common use cases:

* CPU Node Overview

* HTTP Server Metrics

* Network Overview

* Node Overview

* Service Memory Overview

* Alpha support for Grafana dashboard imports

* Enhanced dashboard management:

* Easy template selection

* JSON import/export

* Improved dashboard cloning

## Smarter Alerting, Better Control

Take charge of your alerts like never before! Our revamped alerting system puts you in the driver's seat with more flexibility and precise control.

* Powerful attribute-based alerts that catch exactly what you need

* Fine-tune your thresholds with intuitive controls

* Silence notifications your way with smart muting rules

## Lightning-Fast Metrics Engine

We've turbocharged our metrics pipeline! Get ready for blazing-fast queries and deeper insights into your system's performance.

* Seamless OpenTelemetry integration with remote write support

* Create richer visualizations with multiple metrics per chart

* Lightning-quick queries - now 4x faster than before!

## Dashboard Magic

Your dashboards just got a whole lot smarter! We've packed in powerful features to make your monitoring experience more intuitive and flexible than ever.

* Build sophisticated views with hierarchical variables

* Clone and customize dashboards in seconds

* Set perfect time windows for your metrics

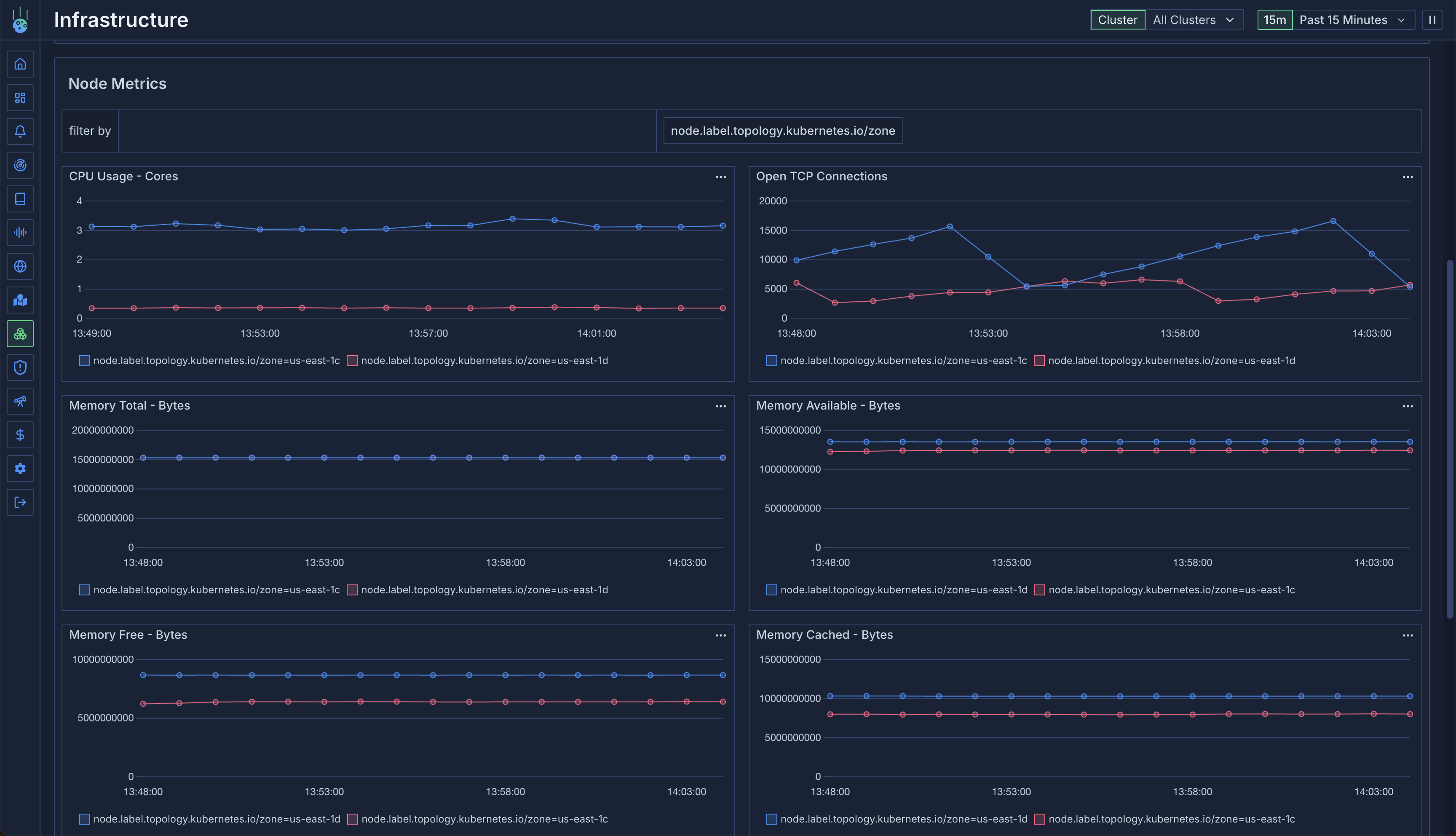

## Infrastructure View Reimagined

Say hello to Infrastructure View 2.0! We've completely rebuilt how you visualize and understand your infrastructure, making it easier than ever to spot trends and troubleshoot issues.

* Track resource utilization with pixel-perfect accuracy

* Understand traffic patterns across availability zones

* Monitor pods and nodes with unprecedented detail

## Smoother, Faster, Better

We've polished every corner of the platform to make your daily monitoring tasks a breeze:

* Share insights instantly with persistent URLs

* Customize your date displays your way

* Navigate namespaces with powerful new controls

## Enhanced Metric Exploration

We've completely revamped how you interact with metrics! The new metric explorer makes it easier than ever to find and analyze the data that matters to you. With dedicated tabs for exploration and catalog browsing, you'll spend less time searching and more time gaining insights.

* Search and filter metrics with lightning speed

* Track request and response sizes with new visualization options

* Full OpenTelemetry metrics support for seamless integration

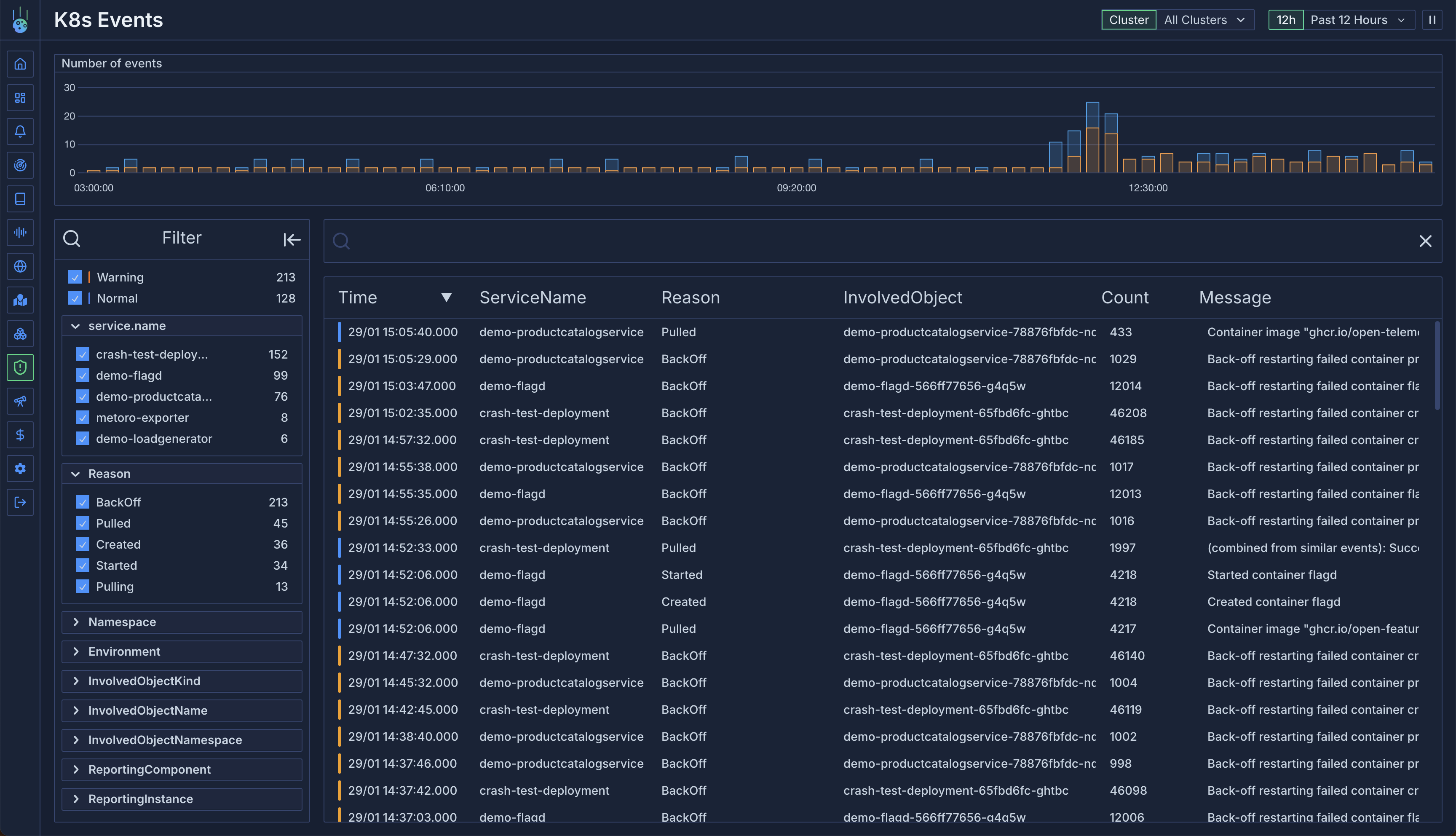

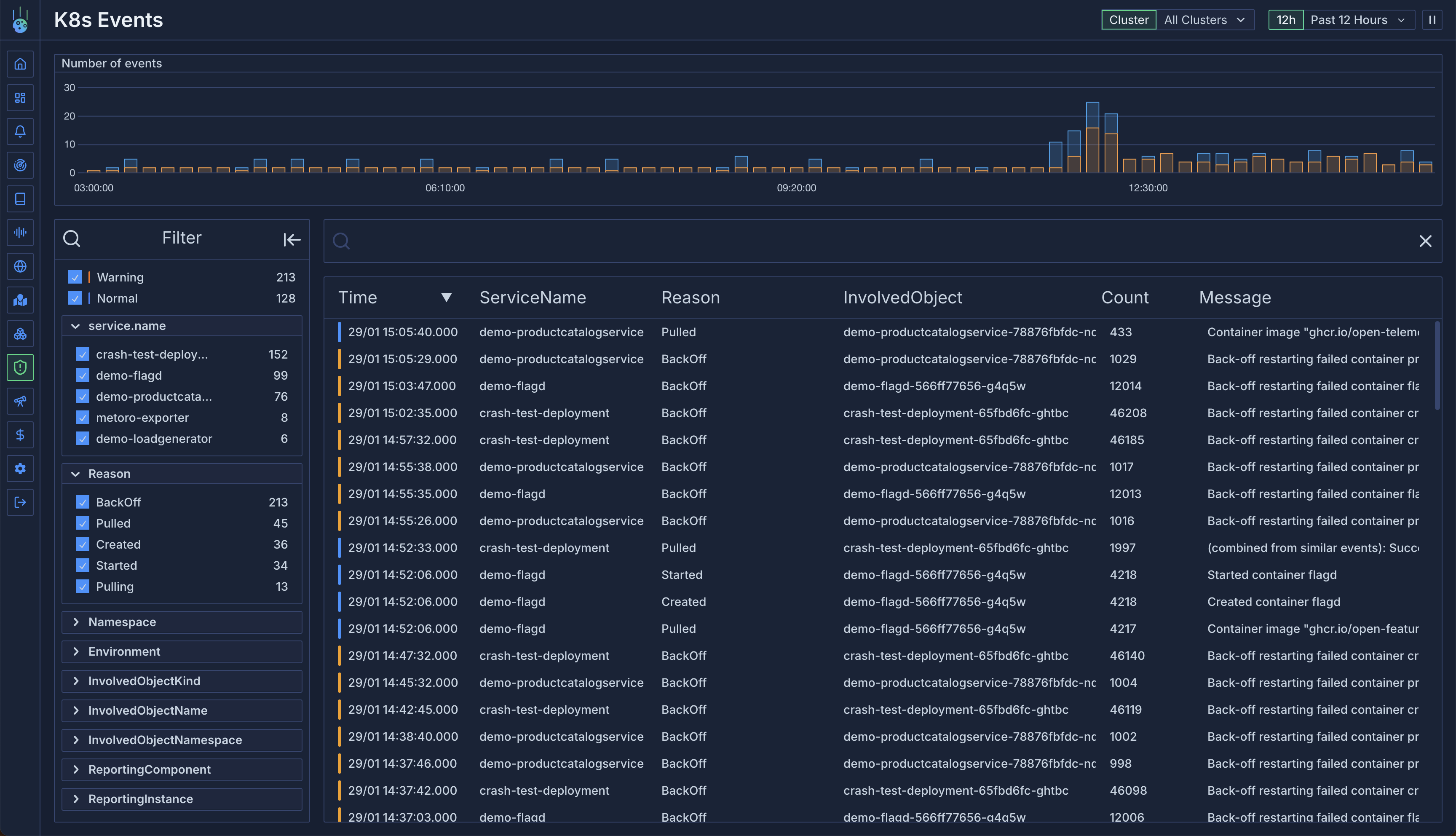

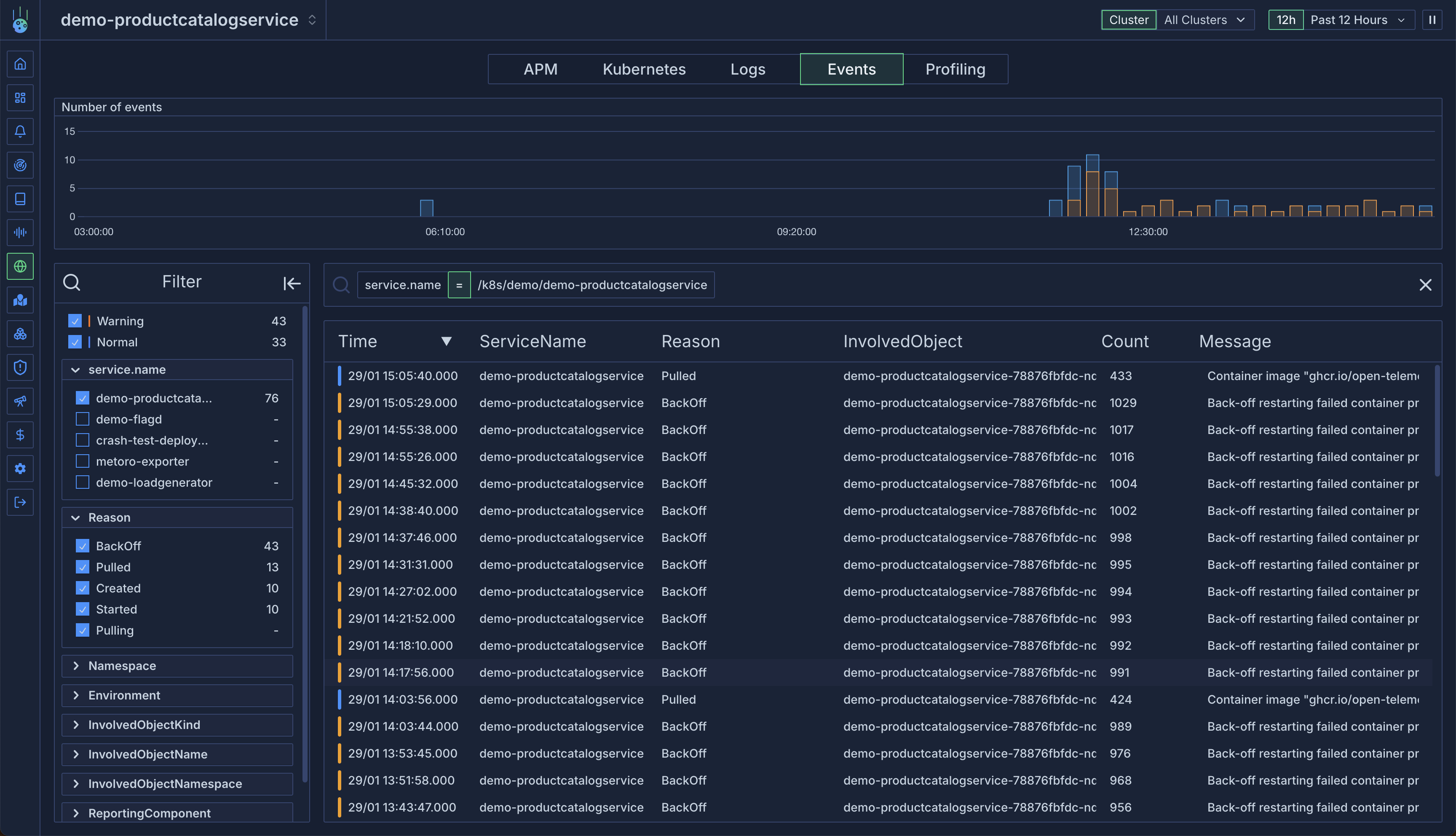

## Smarter Kubernetes Monitoring

Your Kubernetes clusters just got a lot more observable! We've added detailed event tracking and improved availability zone support to give you a complete picture of your infrastructure health.

* Better visibility into cross-zone traffic patterns

* Rich event context for faster debugging

* Improved environment-aware monitoring

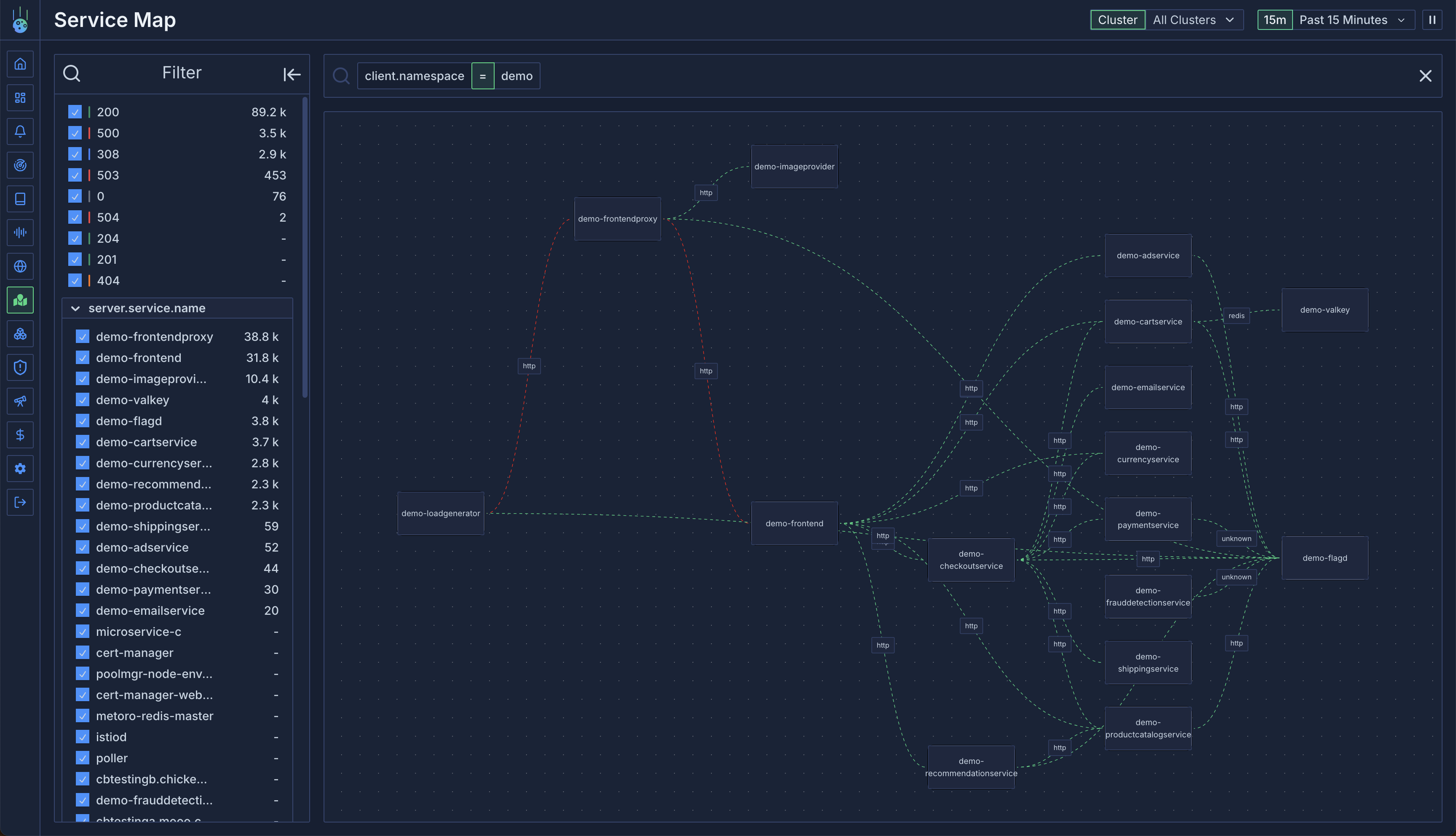

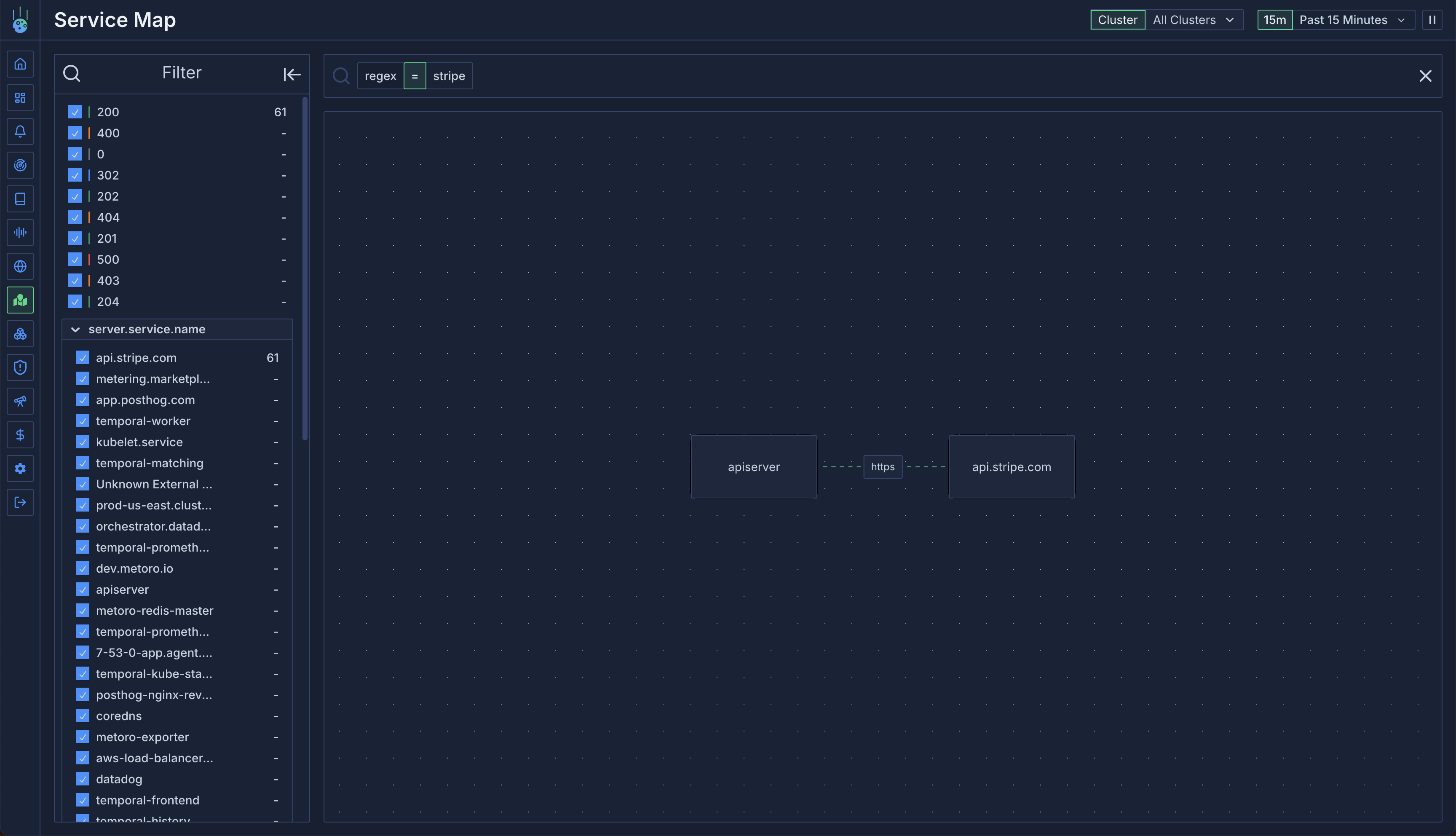

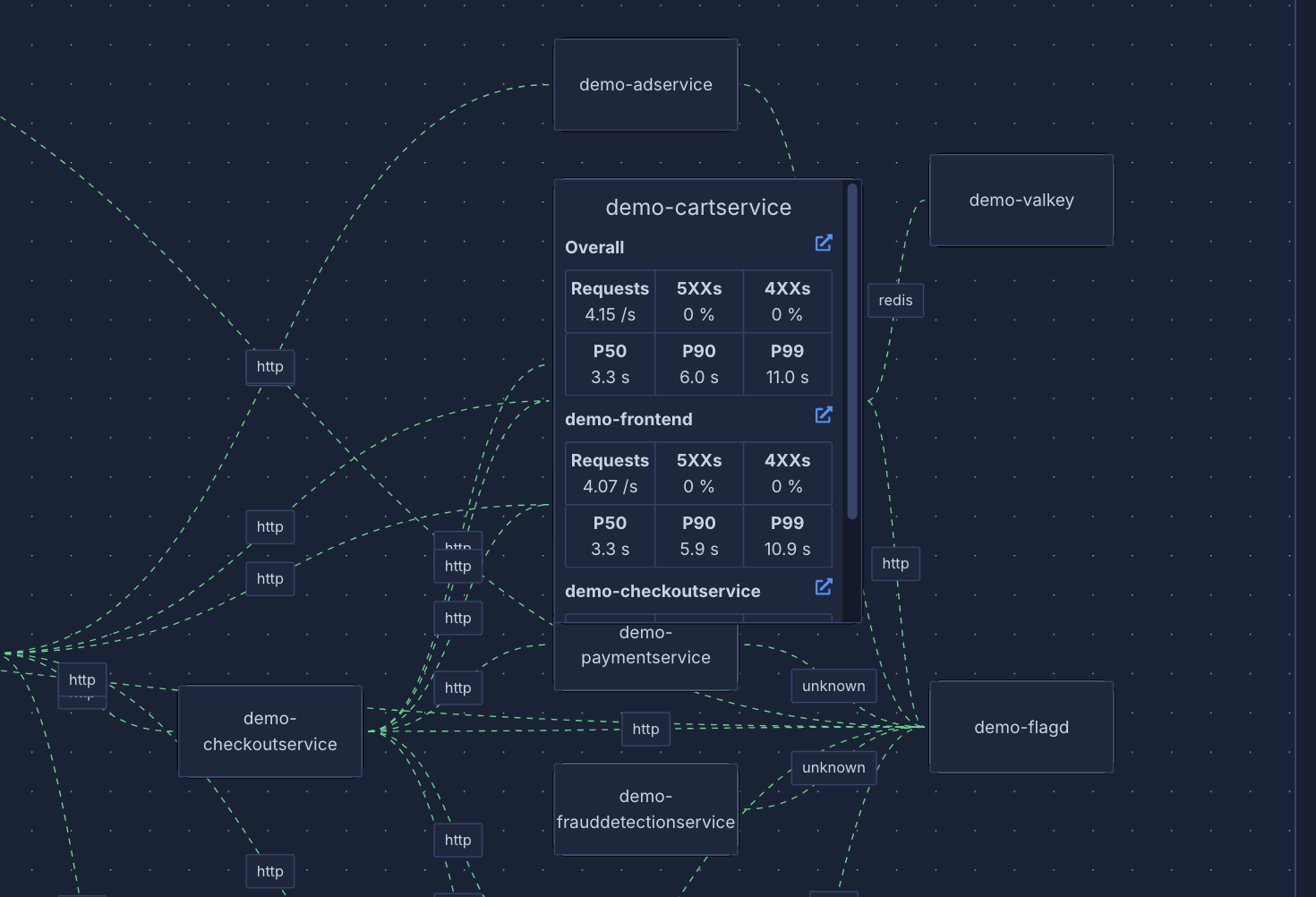

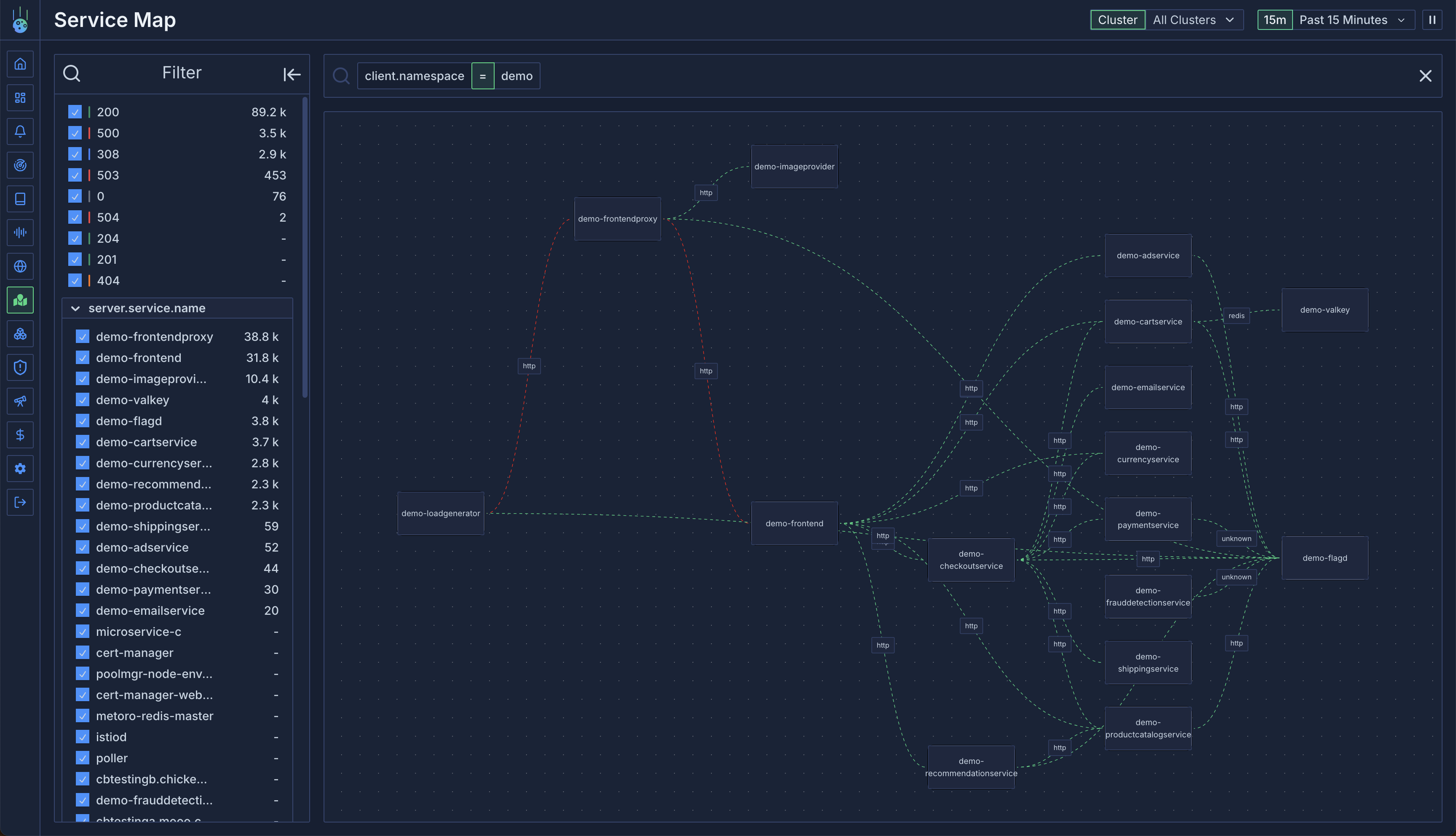

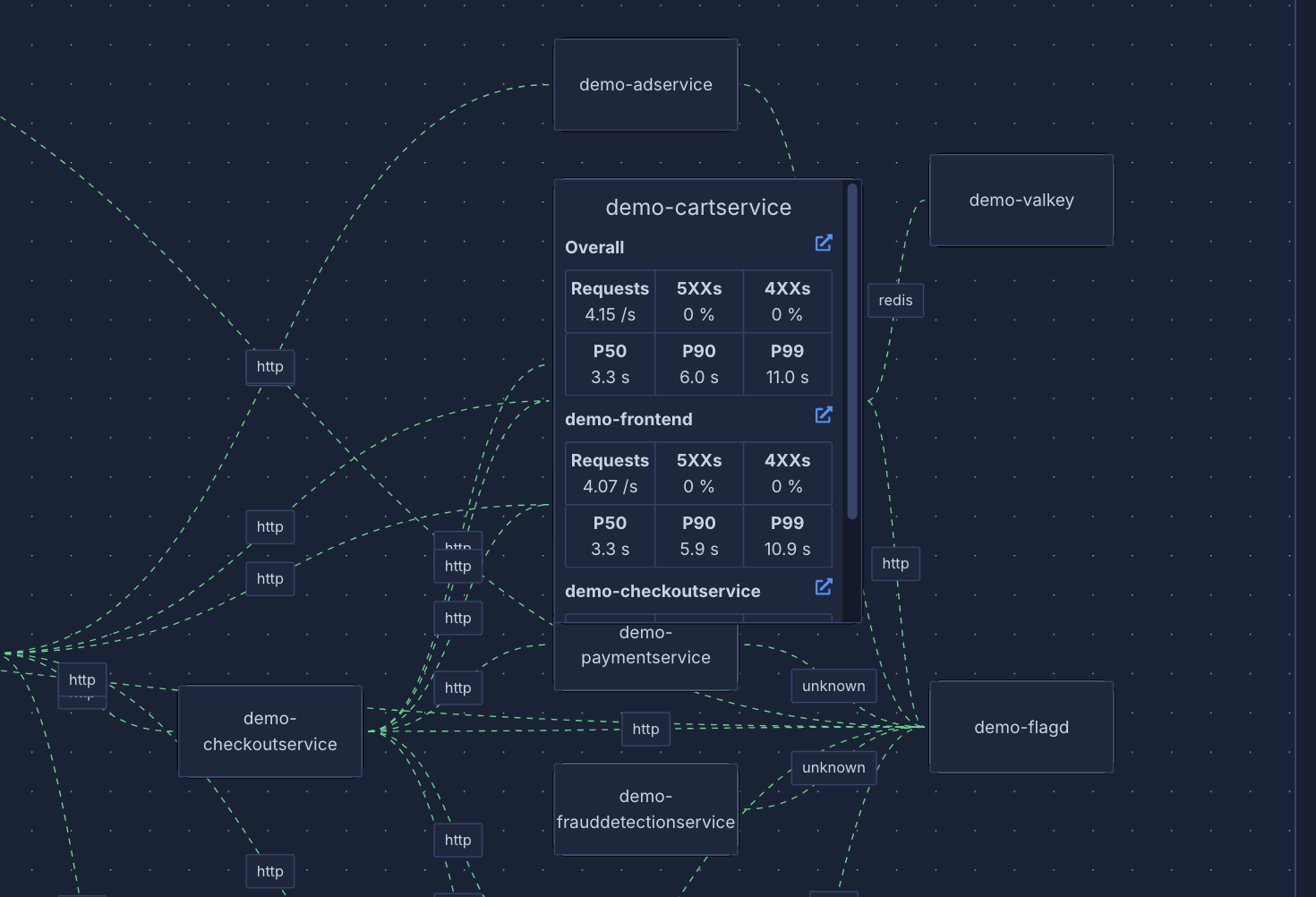

## Service Maps & Infrastructure Insights

Say goodbye to service blindspots! Our new service graph visualization gives you an interactive map of your entire system, making it easy to understand service relationships and dependencies.

* Visual service dependency mapping

* Multi-environment support for complex deployments

* Enhanced pod lifecycle visibility

## Lightning Fast Performance

We've supercharged our backend to handle your observability data faster than ever:

* 4x faster trace queries

* Optimized alert monitoring with reduced latency

* Streamlined Kubernetes data processing

## Proactive Monitoring Suite

Never miss a critical issue again! Our new alerting system watches your traces and metrics to catch problems before they impact your users.

* Set up trace-based alerts for end-to-end monitoring

* Configure metric thresholds with intuitive controls

* Fine-tune alert evaluation periods for your needs

## Smoother User Experience

We've polished the UI to make your daily monitoring tasks more enjoyable:

* Interactive chart legends with smart filtering

* Responsive filters that work as fast as you do

* Enhanced service context in visualizations

## Powerful Filtering & Analysis

Finding the right logs and traces is now easier than ever with our enhanced filtering capabilities:

* Multiple regex support for precise filtering

* Clickable tags for quick context switching

* Smart log ingestion controls

## Enhanced Infrastructure Views

Get deeper insights into your Kubernetes infrastructure with our improved container and pod monitoring:

* Detailed container state tracking

* Rich pod lifecycle visualization

* At-a-glance service health indicators

## Seamless Team Collaboration

Managing your observability team just got easier with our enhanced user management system:

* Quick team invites with secure links

* Streamlined authentication flow

* Flexible user access controls

## Deep Kubernetes Integration

We've expanded our Kubernetes support to give you more visibility than ever:

* Support for all major resource types

* Rich metadata for better context

* One-click navigation to pod details

## Welcome to Metoro! 🎉

We're excited to launch our observability platform, designed to make monitoring your systems a breeze. Our initial release includes everything you need to get started:

* Comprehensive logging and tracing

* Intuitive service catalog

* Essential metrics visualization

* Core Kubernetes integration

* Secure authentication system

We can't wait to see how you'll use Metoro to gain insights into your systems!

# Services

Source: https://metoro.io/docs/concepts/overview

Learn about the core abstraction in Metoro

Metoro generally defined things in terms of Kubernetes constructs.

If you are familiar with Kubernetes, you should be able to understand the majority of what Metoro is showing you.

The one exception to that is the concept of services.

## Services

Services are the core abstraction in Metoro.

They are the entities that represent the persistent components of your microservice application and are the main way you will interact with the data in Metoro.

Services are automatically detected by Metoro and do not require any configuration from you.

### How does Metoro detect a service?

Metoro makes a service out of each of the following Kubernetes resources:

* Deployments

* StatefulSets

* DaemonSets

* ReplicaSets

For example if we have the following architecture in our Kubernetes cluster:

```mermaid

%%{init: {

'theme': 'dark',

'themeVariables': {

'fontFamily': 'Inter',

'primaryColor': '#151F32',

'primaryTextColor': '#EBF1F7',

'primaryBorderColor': '#3793FF',

'lineColor': '#334670',

'secondaryColor': '#151F32',

'tertiaryColor': '#151F32',

'mainBkg': '#151F32',

'nodeBorder': '#3793FF',

'clusterBkg': '#182338',

'titleColor': '#EBF1F7',

'edgeLabelBackground': '#151F32',

'clusterBorder': '#334670'

},

'maxZoom': 2,

'minZoom': 0.5,

'zoom': true

}}%%

graph LR

subgraph Currency Deployment

A[Pod A]

B[Pod B]

end

subgraph Checkout Deployment

C[Pod C]

D[Pod D]

end

```

Metoro will automatically detect 2 services:

* Currency

* Checkout

All of the data around the underlying pods, containers, and the Kubernetes resources themselves are associated with the service they belong to and will be tracked over time by Metoro.

Metoro extracts APM, traces, logs, metrics and profiling data from the pods and containers associated with the service and associates them with the service as well as tracking changes to the kubernetes metadata.

This allows you do do things like see if a new deployment caused a spike in errors or if a new pod is causing a memory leak.

### How do you interact with services?

#### Service Catalog

The best place to get started with services is at the service catalog page. [Check it out here](https://demo.us-east.metoro.io/service-catalog).

The service catalog page shows you all the services that Metoro has detected in your cluster and allows you to drill down into each service to see the data associated with it.

After you find the service you are interested in, you can click on it to see the auto-generated service page.

#### Service Page

The service page shows you in-depth information about the service including:

* APM data

* Kubernetes information like number of replicas, deployment history, etc

* Metrics

* Logs

* Profiling data

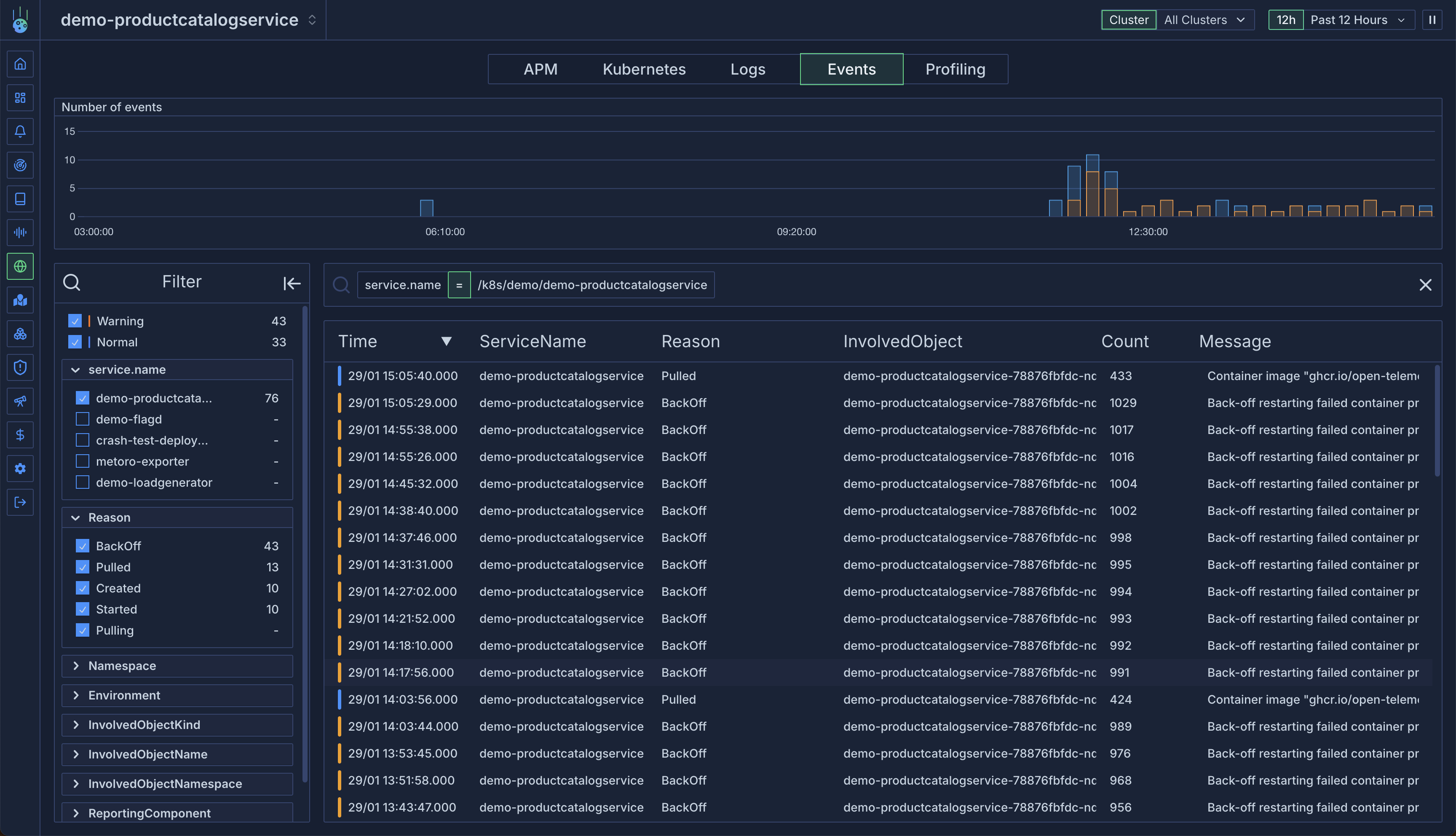

* Kubernetes events associated with the service

The default view is the APM tab. Here you can see autogenerated RED metrics, requests to the service and a service map of the communication between the service and other services in the cluster.

Check out an example [service page](https://demo.us-east.metoro.io/service?service=%2Fk8s%2Fmetoro%2Fmetoro-exporter\&tab=apm\&startEnd=\&podTab=metadata).

Clicking through to the kubernetes tab will show you an overview of service with regards to pods, scaling information and metrics.

You can see all pods associated with the service, drill into them or look at aggregated metrics.

Check out an example [kubernetes page](https://demo.us-east.metoro.io/service?service=%2Fk8s%2Fmetoro%2Fmetoro-exporter\&tab=k8s\&startEnd=\&podTab=metadata\&environment=).

[Logs](/logs) are a great way to see what's going on in your service. Check out an example [logs page](https://demo.us-east.metoro.io/service?service=%2Fk8s%2Fmetoro%2Fmetoro-exporter\&tab=logs\&startEnd=\&excludeFilter=%7B%7D\&filter=%7B%22service.name%22%3A%5B%22%2Fk8s%2Fmetoro%2Fmetoro-exporter%22%5D%7D).

[Events](/kubernetes-resources/kubernetes-events) show all of the kubernetes events which have been emitted which target a resource associated with the service. For example when a pod is being created you will see the event in the events tab. It's a good way to see if any cluster level issues are happening with a service like failed scheduling or pod restarts.

Check out an example [here](https://demo.us-east.metoro.io/service?service=%2Fk8s%2Fmetoro%2Fmetoro-exporter\&tab=events\&startEnd=\&podTab=metrics\&environment=\&last=6h)

Finally the [profiling](/profiling) tab will show you any profiling data collected from the pod and containers associated with the service and aggregate it to show you how much time is being spent in each function across all pods.

Check out an example [profiling page](https://demo.us-east.metoro.io/service?service=%2Fk8s%2Fmetoro%2Fmetoro-exporter\&tab=profiling\&startEnd=\&podTab=metadata\&environment=).

This allows you to see if there are any performance bottlenecks in your service.

# Creating and Editing Dashboards

Source: https://metoro.io/docs/dashboards/creating-editing

## Creating a Dashboard

To create a new dashboard, click on the **Create Dashboard** button on the top right corner of the dashboards view.

## Editing a Dashboard

To edit a dashboard, click on the **Edit** button on the dashboard card.

You can add new charts and groups by clicking the **Add widget** button on the dashboard view.

When creating a new chart, you'll run through the chart creation wizard where you can search for metrics, select aggregations and filters, and customize the chart appearance.

## Using Predefined Dashboard Templates

Metoro provides a set of predefined dashboard templates to help you get started quickly. These templates are designed to cover common monitoring scenarios and can be easily customized to fit your needs.

### Available Templates

* **CPU Node Overview**: Provides detailed insights into CPU usage and performance metrics across your nodes

* **HTTP Server Metrics**: Monitors HTTP server performance, including request rates, latencies, and error rates

* **Network Overview**: Visualizes network traffic, bandwidth usage, and connectivity metrics

* **Node Overview**: Comprehensive view of node health and performance metrics

* **Service Memory Overview**: Tracks memory usage and allocation patterns across your services

### Using Templates

1. Click on the **Create Dashboard** button

2. Select "Use Template" from the creation dialog

3. Choose your desired template from the available options

4. The template will be imported with pre-configured widgets and variables

5. Customize the dashboard as needed for your specific use case

### Importing and Exporting Dashboards

You can also import existing dashboard configurations or export your dashboards as JSON:

* To import: Use the dashboard settings to import a JSON configuration

* To export: Access the dashboard settings and select the export option to download the dashboard configuration as JSON

This feature is particularly useful for sharing dashboard configurations across teams or backing up your dashboard setups.

# Dashboards Overview

Source: https://metoro.io/docs/dashboards/overview

Diagnostic information is often useful in a particular context.

Dashboards are a great way of saving these metrics and grouping them together for easy access when needed.

For example you might want to create a dashboard to show the health of some particularly important services.

In this dashboard you can include charts on metoro-collected data like traces, APM data, log information and container metrics. Then show that side by side with custom metrics emitted from your services.

## Dashboards View

The [dashboards](https://demo.us-east.metoro.io/dashboards) view in Metoro shows all the dashboards that have been created in your organization.

Each dashboard has a unique url that you can share with others, for example, this [test dashboard](https://demo.us-east.metoro.io/dashboard?dashboardId=85301588-eb27-4b68-be36-efa2049a6cf3\&environment=\&startEnd=\&service=).

# Dashboard Variables

Source: https://metoro.io/docs/dashboards/variables

Variables allow you to dynamically apply filters to charts. The values of variables can be constant or can be edited by users when they are looking at a dashboard.

When a user edits the value of a variable in the dashboard, it will not be saved. It will only be applied to the current session.

This allows you to build generic dashboards, for example: a postgres dashboard showing performance metrics for different a particular database. Then users can select the database they're interested in.

## Variable Components

A variables is made up of these components:

* Name - The name of the variable which will be referenced when applying the filter.

* Possible Values - Suggested values for the variable. This is all the values of a particular tag for traces or metrics.

* Default Value - The default value of the variable. This is the value that will be applied when the variable is not edited by the user.

If the value is `*` then it is for all values of the key.

* Overrideable - Whether a user can edit the value of the variable inside the dashboard for their session.

An example variable definition is below:

This variable has a name of `serviceName` and a default value of `*` which means all service names. The possible values are the unique values of the serviceName tag for traces.

## Creating Variables

You can create a variable by clicking the variable icon on any group widget in edit mode.

After a variable is created, you will see it in the header of the group widget.

## Using Variables

After you have created a variable you can use it in the filters of any chart that is nested below that group.

In the chart editor you can set any filter equal to the variable value.

After using a variable in the chart. Change the value of the variable by clicking the variable in the widget and selecting (or typing) the new value.

# Dashboard Widgets

Source: https://metoro.io/docs/dashboards/widgets

A dashboard is composed of widgets arranged on a canvas. There are three types of widgets available:

## Group Widget

The group widget is used to group related widgets together. It is useful for organizing charts and other widgets.

Widgets that are grouped can be moved together as a single entity.

## Chart Widget

The chart widget is used to display any sort of data.

After you add a chart widget you can configure it through the Chart Builder.

A chart is comprised of 7 key elements:

1. The metric type. This is the underlying data that will be queried. It is either [metric](/metrics/overview) or [trace](/traces/overview)

2. The chart type. This defines the visualisation. Either a bar or line chart.

3. The metric name. This is the name of the metric that will be displayed. This is only relevant for metric charts.

4. Filters. Filters consist of a number of key value pairs. This will restrict the queried data to only data points matching the filters. Each filter is ANDed with every other filter. Individual filters can be ORed together by inserting `||` between each value.

5. Aggregation. This is the operation to apply to the data. For example, if you want to sum the data you would select `sum`. The available aggregations for metrics are:

* `sum`

* `avg`

* `min`

* `max`

The available aggregations for traces are:

* `count`

* `request size`

* `response size`

* `total size`

* `p50 latency`

* `p90 latency`

* `p95 latency`

* `p99 latency`

6. Groups. This is a list of keys that will be used to group the data.

7. Functions. Functions are mathematical operations that can be applied to the data. For example, if you want to calculate the monotonic difference of the data you would select `monotonic_diff`. The available functions are:

* `monotonic_diff` - The difference between the current value and the previous value in the timeseries. If the difference is negative, it will be clamped at 0.

* `value_diff` - The difference between the current value and the previous value in the timeseries.

* `custom_math_expression` - An arbitrary math expression applied to the time series. The timeseries is passed as the parameter `a` to the expression. For example, if you want to divide the data by 2 you would select `a / 2`.

## Markdown Widget

The markdown widget is used to display arbitrary markdown. For example if you have a dashboard corresponding to a run book for a particular type of incident you can use the markdown widget to display the run book and keep it up to date with the actual dashboard.

The markdown widget supports any markdown that is compatible with the [CommonMark](https://commonmark.org/) format. A cheat sheet can be found [here](https://commonmark.org/help)

# Metoro Architecture

Source: https://metoro.io/docs/getting-started/architecture

Metoro has two main components: the in-cluster agents and the observability backend.

At a very high level, the flow of data in Metoro is as follows:

1. The node agents are responsible for collecting data from the linux kernel of all nodes in the Kubernetes cluster and writing to cluster local storage.

2. The cluster exporter then reads the data from the local storage aggregating across all nodes and sends it to the Metoro backend ingesters along with the Kubernetes metadata (pods, deployments, configmaps, etc).

3. The ingesters write all observability data to the long-term backend storage: currently clickhouse.

4. The api server reads data from the backend storage and serves it to the frontend and any api clients.

The following diagram shows the high level architecture of Metoro:

```mermaid

%%{init: {

'theme': 'dark',

'themeVariables': {

'fontFamily': 'Inter',

'primaryColor': '#151F32',

'primaryTextColor': '#EBF1F7',

'primaryBorderColor': '#3793FF',

'lineColor': '#334670',

'secondaryColor': '#151F32',

'tertiaryColor': '#151F32',

'mainBkg': '#151F32',

'nodeBorder': '#3793FF',

'clusterBkg': '#182338',

'titleColor': '#EBF1F7',

'edgeLabelBackground': '#151F32',

'clusterBorder': '#334670'

},

'maxZoom': 2,

'minZoom': 0.5,

'zoom': true

}}%%

flowchart LR

%% ---- Clients (outside both subgraphs) ----

A[Clients]

%% ---- "Metro - Cloud or On-Prem" subgraph ----

subgraph "Metro - Cloud or On-Prem"

B["Metro API Server"]

C["Observability Data Storage - ClickHouse"]

D["Metadata / Workflow Storage - Postgres"]

E["Temporal Workers - Alerts / Job Running"]

F["Ingesters"]

B --> C

B --> D

B --> E

F --> C

end

%% Connect Clients to Metro API Server (outside subgraph so Clients remain outside)

A --> B

%% ---- "Kubernetes Cluster" subgraph ----

subgraph "Kubernetes Cluster"

subgraph "Kubernetes Nodes"

subgraph "Linux Kernel"

Z["System Calls"]

Z1["eBPF Programs"]

end

G["Microservice Container"]

H["Microservice Container"]

I["Microservice Container"]

J["Metoro Node Agent"]

%% Microservices talk to the Linux Kernel

G --> Z

H --> Z

I --> Z

%% Metoro Agent reads from the Kernel

Z --> Z1

Z1 --> J

end

K["Metoro Exporter"]

L["Kubernetes API Server"]

M["Redis (temporary metadata storage)"]

%% Metoro Agent passes data to the Metoro Exporter

J --> K

K --> F

L --> K

K --> M

J --> M

end

style A fill:#182338,stroke:#3793FF,color:#EBF1F7

style B fill:#182338,stroke:#3793FF,color:#EBF1F7

style C fill:#182338,stroke:#3793FF,color:#EBF1F7

style D fill:#182338,stroke:#3793FF,color:#EBF1F7

style E fill:#182338,stroke:#3793FF,color:#EBF1F7

style F fill:#182338,stroke:#3793FF,color:#EBF1F7

style G fill:#182338,stroke:#3793FF,color:#EBF1F7

style H fill:#182338,stroke:#3793FF,color:#EBF1F7

style I fill:#182338,stroke:#3793FF,color:#EBF1F7

style J fill:#182338,stroke:#3793FF,color:#EBF1F7

style K fill:#182338,stroke:#3793FF,color:#EBF1F7

style L fill:#182338,stroke:#3793FF,color:#EBF1F7

style M fill:#182338,stroke:#3793FF,color:#EBF1F7

style Z fill:#182338,stroke:#3793FF,color:#EBF1F7

```

# Getting Started

Source: https://metoro.io/docs/getting-started/getting-started

Get started with Metoro in under 5 minutes

Metoro offers two ways to set up and use the platform:

1. **Managed Cloud**: Let us handle everything for you. We host and maintain the platform. You just need to install the Metoro Agent on your cluster.

2. **On-Prem**: Host and manage Metoro entirely within your infrastructure for full control over your data and setup.

## Compatibility

Metoro can run on any Kubernetes cluster, whether it is on-premises, in the cloud, or managed by a cloud provider.

Metoro explicitly supports the following Kubernetes distributions for production use:

* AWS Elastic Kubernetes Service (EKS)

* Google Kubernetes Engine (GKE) without GKE autopilot

* Azure Kubernetes Service (AKS)

* Bare-metal on-prem Kubernetes installations

* OpenShift clusters

## Installation (Managed Cloud)

Metoro is designed to be super easy to get up and running with. We think that it should take \< 5 minutes to get end-to-end observability.

Start your timer and let's get started!

1. Head to the [Metoro Webapp](https://us-east.metoro.io/) and sign up with your email address.

2. After you log in, follow the on-screen instructions to install Metoro on your cluster.

You will be prompted to select a Kubernetes cluster for the Metoro Agent installation. You have two options:

* **Existing Cluster**: This option allows you to install Metoro on an existing Kubernetes cluster that is already running, whether in the cloud, on-premises, or elsewhere.

Select this option if you are setting up Metoro for an existing environment.

* **Local Dev Cluster**: This option sets up a local kubernetes cluster on your machine and installs Metoro into it. Choose this if you are starting a new project or simply trying out Metoro without installing it into an existing cluster.

3. Once you have selected your cluster, copy-paste the installation command into your terminal and hit enter. Make sure that your kubernetes context is set to the cluster you want to install Metoro into.

4. Once the installation is complete, you will see a success message in your terminal.

It can take a couple of minutes for Metoro to receive your cluster's data.

* If you are installing Metoro to a local dev cluster, this might take 5-10 minutes as it sets up the cluster.

* If you are installing Metoro to an existing cluster, this will take around a minute.

5. Once Metoro shows that it is receiving data, you can start exploring your cluster. You now have end-to-end telemetry. You should see the following screen:

## Installation (On-Prem)

Check out our [on-premises installation guide](/administration/on-premises) for more information.

# Introduction

Source: https://metoro.io/docs/getting-started/introduction

Welcome to Metoro - The Kubernetes Native Observability Platform

Metoro is an observability platform specifically designed for Kubernetes. With a single helm chart installation, you get complete observability for your clusters.

Automatic zero instrumentation traces for all container requests with support for HTTP(s), Kafka, NATS,

RabbitMQ, Redis, and more powered by eBPF. Bring your own custom traces with OpenTelemetry.

Every log from every container stored in a single place. Automatic structured JSON log parsing with a billion

logs per second search. Bring your own custom logs with OpenTelemetry.

Detailed metrics collection and visualization for all pods, containers and hosts out of the box. Bring your own

custom metrics with OpenTelemetry.

Zero instrumentation ebpf powered on-cpu profiling for all containers. See cpu time down to the function level. Full support for C, C++, Python, Golang, Rust.

Every change to every resource, stored and indexed in a single place. A complete history of your cluster's state

with derived metrics.

Understand and visualize every dependency and network path. No instrumentation required.

Alert on anything in platform, including metrics, logs, traces, and Kubernetes resources.

Auto generated APM for every service. RED metrics, profiling, incoming and outgoing traces and much more.

Automated issue detection and cost optimization recommendations

All of this in a sub 5 minute install, we promise.

Head to the onboarding documentation to get started.

# System Requirements

Source: https://metoro.io/docs/getting-started/requirements

This page outlines the system requirements for running various Metoro components in your environment.

There are three main components which run in your Kubernetes cluster:

1. **The node agent** - Runs on each node in your Kubernetes cluster

2. **The exporter** - Runs multiple replicas depending on load, scales with horizontal pod autoscaler

3. **The redis cache** - One replica per cluster

Each component has specific resource requirements as detailed below. All requirements are approximate and assume a relatively up to date cpu. Testing is perfomed on AWS M5 instances running on a Skylake 8175M.

The node agents resource requirements scale with several factors, primarily:

* The number of requests being made to / from the node agent

* The number of logs being collected

At a minimum, each node agent requires:

* 0.05 cores

* 100Mi memory

For each additional:

* 1000 requests/second: +0.2 cores CPU, +0.2GB memory, +0.1MB/s network

* 1000 logs/second: +0.05 cores CPU, +0.1GB memory, +0.1MB/s network

### Resource Scaling Table

| Requests per second | Logs per second | CPU (cores) | Memory (GB) | Network (MB/s) |

| ------------------- | --------------- | ----------- | ----------- | -------------- |

| 1000 | 1000 | 0.25 | 0.3 | 0.2 |

| 2000 | 2000 | 0.5 | 0.6 | 0.4 |

| 3000 | 3000 | 0.75 | 0.9 | 0.6 |

| 4000 | 4000 | 1.0 | 1.2 | 0.8 |

| 5000 | 5000 | 1.25 | 1.5 | 1.0 |

These requirements are approximate and may vary based on:

* Number of containers being monitored

* Types of metrics being collected

* Frequency of metric collection

* Network conditions

* Size of individual log lines

The resources required mainly scale with the number of traces / logs being collected across all nodes.

For each additional:

* 1000 traces/second: +0.05 cores CPU, +0.1GB memory, +0.1MB/s network

* 1000 logs/second: +0.02 cores CPU, +0.1GB memory, +0.1MB/s network

### Resource Scaling Table

| Traces per second | Logs per second | CPU (cores) | Memory (GB) | Network (MB/s) |

| ----------------- | --------------- | ----------- | ----------- | -------------- |

| 1000 | 1000 | 0.07 | 0.2 | 0.2 |

| 2000 | 2000 | 0.14 | 0.4 | 0.4 |

| 3000 | 3000 | 0.21 | 0.6 | 0.6 |

| 4000 | 4000 | 0.28 | 0.8 | 0.8 |

| 5000 | 5000 | 0.35 | 1.0 | 1.0 |

These requirements are approximate and may vary based on:

* Number of containers being monitored

* Types of metrics being collected

* Frequency of metric collection

* Network conditions

* Size of individual log lines

Redis is used for temporary storage and caching of metrics data.

Redis has minimal resource requirements:

* 0.05 cores CPU

* 20Mi memory

***

### Example Cluster Configuration

For a cluster with:

* 100 nodes

* 1000 requests per second per node

* 1000 logs per second per node

The resources required are:

* **100 node agents**: 0.25 cores, 0.3GB memory, 0.2MB/s network per node

* **1 cluster exporter**: Processing 100k traces/second, 100k logs/second across all nodes

* Total: 7 cores, 20GB memory, 20MB/s network (split across N replicas)

* **1 redis cache**: 50m cores, 20Mi memory

**Total cluster resources required**

* 32 cores CPU

* 50GB memory

* 40MB/s network

## Default Resource Requests

The default resource requests for the node-agent are:

* 0.3 cores, 300Mi memory

The default resource requests for the exporter are:

* 1 core, 2GB memory

You should tune these requests to meet your specific needs.

# Infrastructure View

Source: https://metoro.io/docs/infrastructure/overview

Monitor and analyze your Kubernetes cluster nodes and their resources

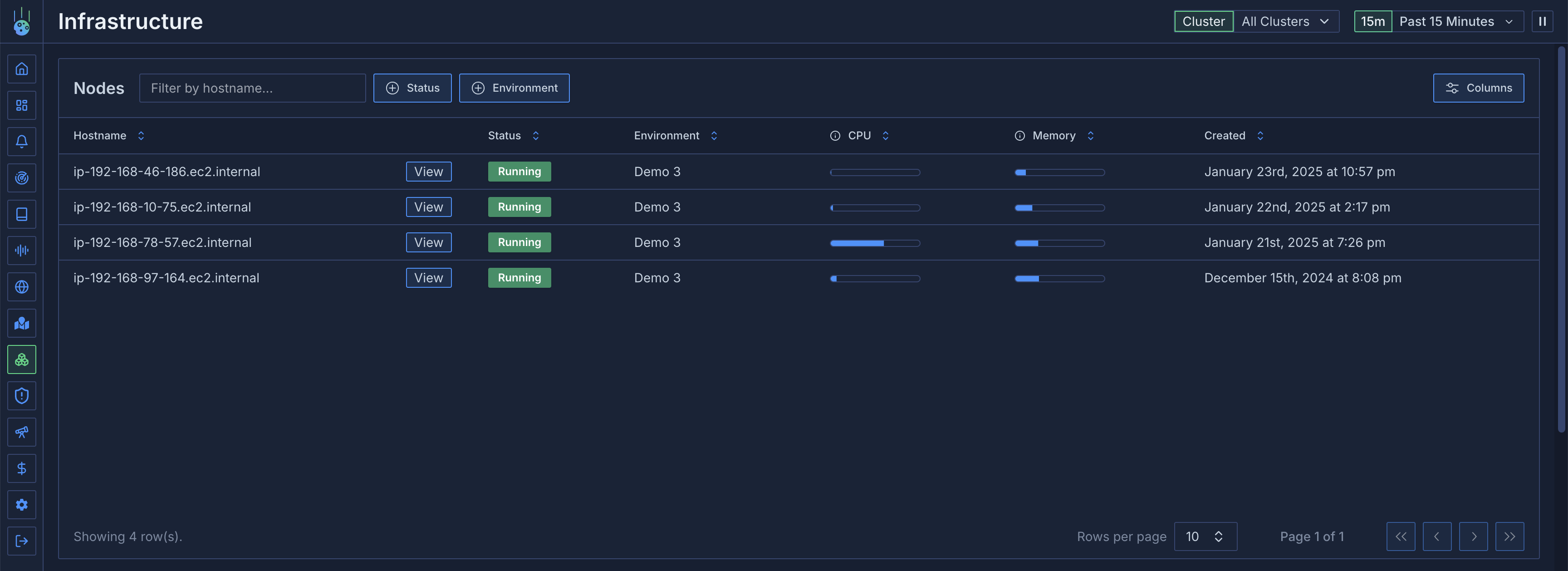

## Overview

The Infrastructure view provides comprehensive monitoring and analysis of all nodes across your Kubernetes clusters. It offers detailed insights into node resources, pod distributions, and system metrics.

## Node Overview

### Node Table

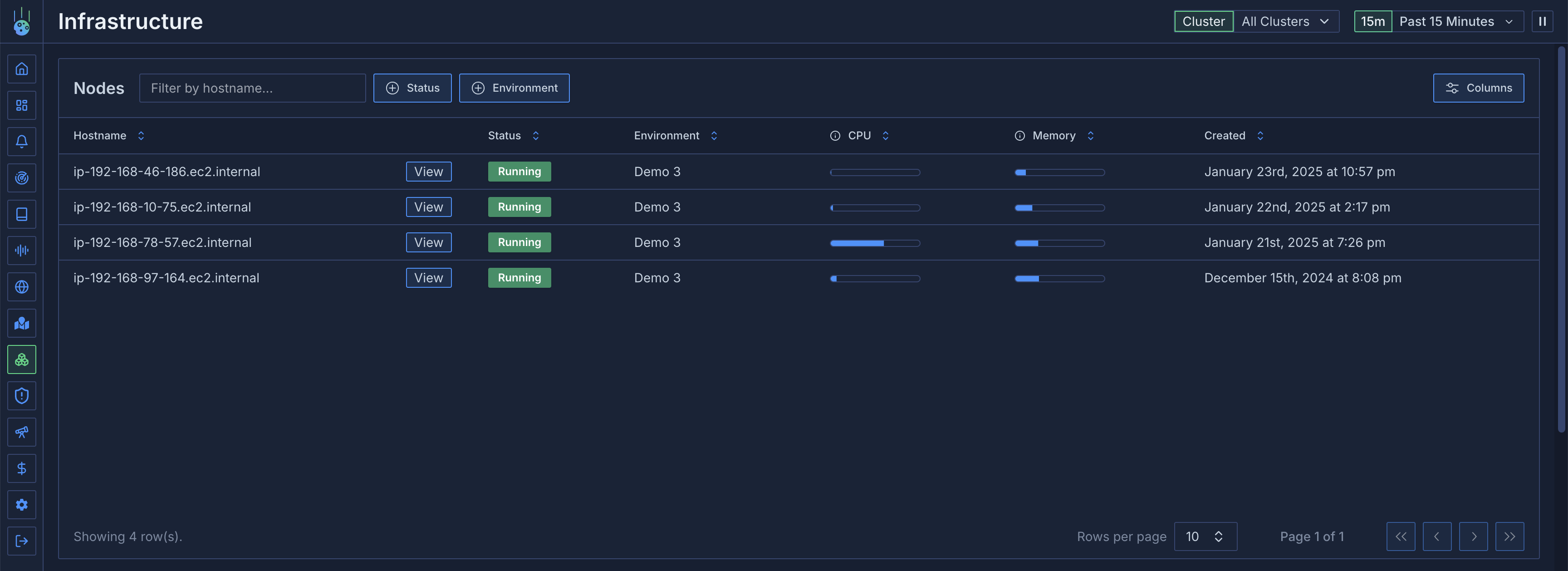

The top section displays a table of all nodes with key information:

* Node names (searchable)

* CPU utilization over selected time period

* Memory usage trends

* Filterable by various attributes

Metoro is an observability platform specifically designed for Kubernetes. With a single helm chart installation, you get complete observability for your clusters.

Automatic zero instrumentation traces for all container requests with support for HTTP(s), Kafka, NATS,

RabbitMQ, Redis, and more powered by eBPF. Bring your own custom traces with OpenTelemetry.

Every log from every container stored in a single place. Automatic structured JSON log parsing with a billion

logs per second search. Bring your own custom logs with OpenTelemetry.

Detailed metrics collection and visualization for all pods, containers and hosts out of the box. Bring your own

custom metrics with OpenTelemetry.

Zero instrumentation ebpf powered on-cpu profiling for all containers. See cpu time down to the function level. Full support for C, C++, Python, Golang, Rust.

Every change to every resource, stored and indexed in a single place. A complete history of your cluster's state

with derived metrics.

Understand and visualize every dependency and network path. No instrumentation required.

Alert on anything in platform, including metrics, logs, traces, and Kubernetes resources.

Auto generated APM for every service. RED metrics, profiling, incoming and outgoing traces and much more.

Automated issue detection and cost optimization recommendations

All of this in a sub 5 minute install, we promise.

Head to the onboarding documentation to get started.

# System Requirements

Source: https://metoro.io/docs/getting-started/requirements

This page outlines the system requirements for running various Metoro components in your environment.

There are three main components which run in your Kubernetes cluster:

1. **The node agent** - Runs on each node in your Kubernetes cluster

2. **The exporter** - Runs multiple replicas depending on load, scales with horizontal pod autoscaler

3. **The redis cache** - One replica per cluster

Each component has specific resource requirements as detailed below. All requirements are approximate and assume a relatively up to date cpu. Testing is perfomed on AWS M5 instances running on a Skylake 8175M.

The node agents resource requirements scale with several factors, primarily:

* The number of requests being made to / from the node agent

* The number of logs being collected

At a minimum, each node agent requires:

* 0.05 cores

* 100Mi memory

For each additional:

* 1000 requests/second: +0.2 cores CPU, +0.2GB memory, +0.1MB/s network

* 1000 logs/second: +0.05 cores CPU, +0.1GB memory, +0.1MB/s network

### Resource Scaling Table

| Requests per second | Logs per second | CPU (cores) | Memory (GB) | Network (MB/s) |

| ------------------- | --------------- | ----------- | ----------- | -------------- |

| 1000 | 1000 | 0.25 | 0.3 | 0.2 |

| 2000 | 2000 | 0.5 | 0.6 | 0.4 |

| 3000 | 3000 | 0.75 | 0.9 | 0.6 |

| 4000 | 4000 | 1.0 | 1.2 | 0.8 |

| 5000 | 5000 | 1.25 | 1.5 | 1.0 |

These requirements are approximate and may vary based on:

* Number of containers being monitored

* Types of metrics being collected

* Frequency of metric collection

* Network conditions

* Size of individual log lines

The resources required mainly scale with the number of traces / logs being collected across all nodes.

For each additional:

* 1000 traces/second: +0.05 cores CPU, +0.1GB memory, +0.1MB/s network

* 1000 logs/second: +0.02 cores CPU, +0.1GB memory, +0.1MB/s network

### Resource Scaling Table

| Traces per second | Logs per second | CPU (cores) | Memory (GB) | Network (MB/s) |

| ----------------- | --------------- | ----------- | ----------- | -------------- |

| 1000 | 1000 | 0.07 | 0.2 | 0.2 |

| 2000 | 2000 | 0.14 | 0.4 | 0.4 |

| 3000 | 3000 | 0.21 | 0.6 | 0.6 |

| 4000 | 4000 | 0.28 | 0.8 | 0.8 |

| 5000 | 5000 | 0.35 | 1.0 | 1.0 |

These requirements are approximate and may vary based on:

* Number of containers being monitored

* Types of metrics being collected

* Frequency of metric collection

* Network conditions

* Size of individual log lines

Redis is used for temporary storage and caching of metrics data.

Redis has minimal resource requirements:

* 0.05 cores CPU

* 20Mi memory

***

### Example Cluster Configuration

For a cluster with:

* 100 nodes

* 1000 requests per second per node

* 1000 logs per second per node

The resources required are:

* **100 node agents**: 0.25 cores, 0.3GB memory, 0.2MB/s network per node

* **1 cluster exporter**: Processing 100k traces/second, 100k logs/second across all nodes

* Total: 7 cores, 20GB memory, 20MB/s network (split across N replicas)

* **1 redis cache**: 50m cores, 20Mi memory

**Total cluster resources required**

* 32 cores CPU

* 50GB memory

* 40MB/s network

## Default Resource Requests

The default resource requests for the node-agent are:

* 0.3 cores, 300Mi memory

The default resource requests for the exporter are:

* 1 core, 2GB memory

You should tune these requests to meet your specific needs.

# Infrastructure View

Source: https://metoro.io/docs/infrastructure/overview

Monitor and analyze your Kubernetes cluster nodes and their resources

## Overview

The Infrastructure view provides comprehensive monitoring and analysis of all nodes across your Kubernetes clusters. It offers detailed insights into node resources, pod distributions, and system metrics.

## Node Overview

### Node Table

The top section displays a table of all nodes with key information:

* Node names (searchable)

* CPU utilization over selected time period

* Memory usage trends

* Filterable by various attributes

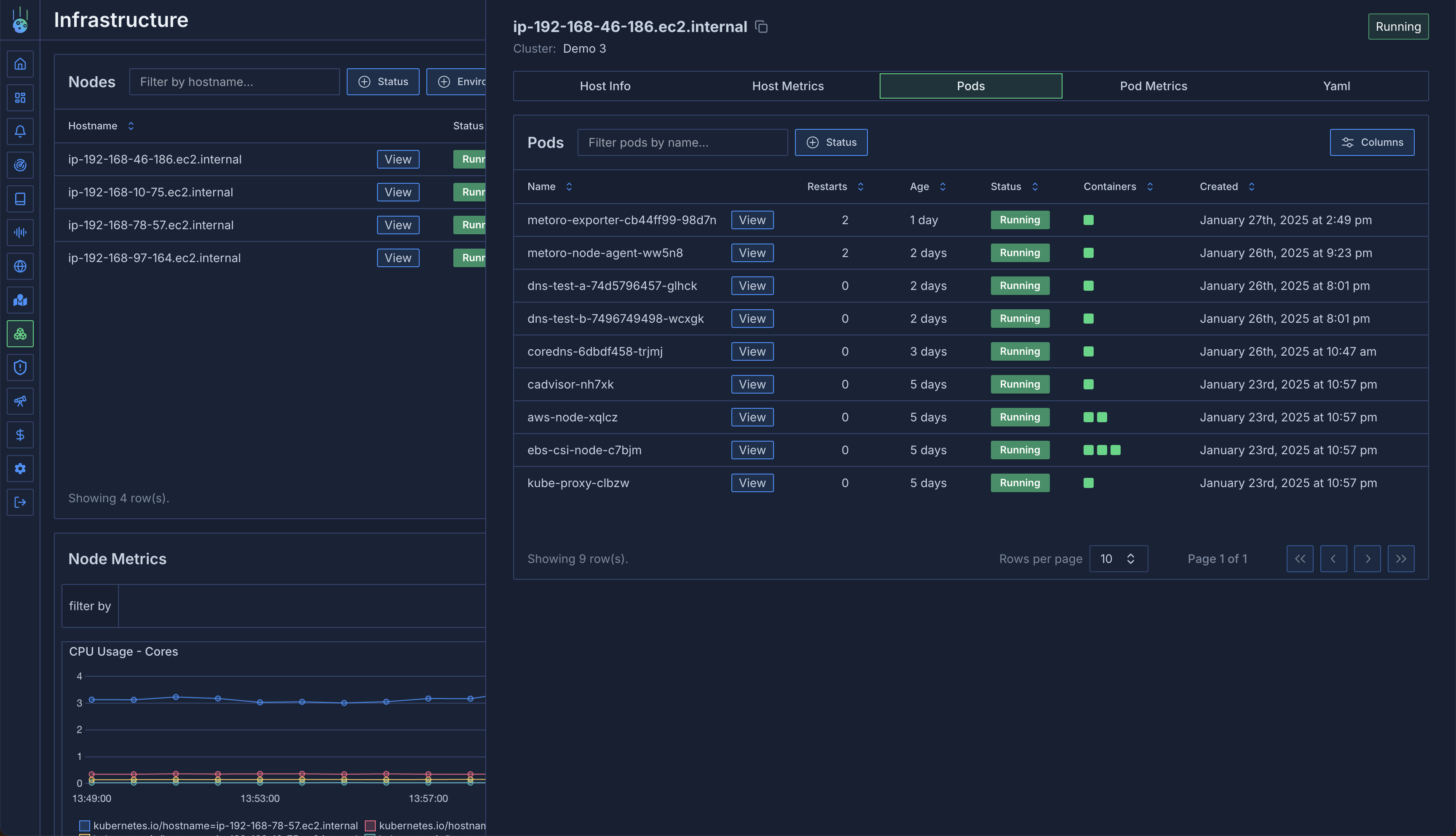

## Node Details

Click on any node to access detailed information across several tabs.

### General Information

* Node capacity

* Available resources

* Kubernetes node metadata

* System information

### Host Metrics

Monitor system-level metrics:

* CPU utilization

* Memory usage

* Disk usage

* Network throughput (transmitted/received bytes)

* Additional system metrics

## Node Details

Click on any node to access detailed information across several tabs.

### General Information

* Node capacity

* Available resources

* Kubernetes node metadata

* System information

### Host Metrics

Monitor system-level metrics:

* CPU utilization

* Memory usage

* Disk usage

* Network throughput (transmitted/received bytes)

* Additional system metrics

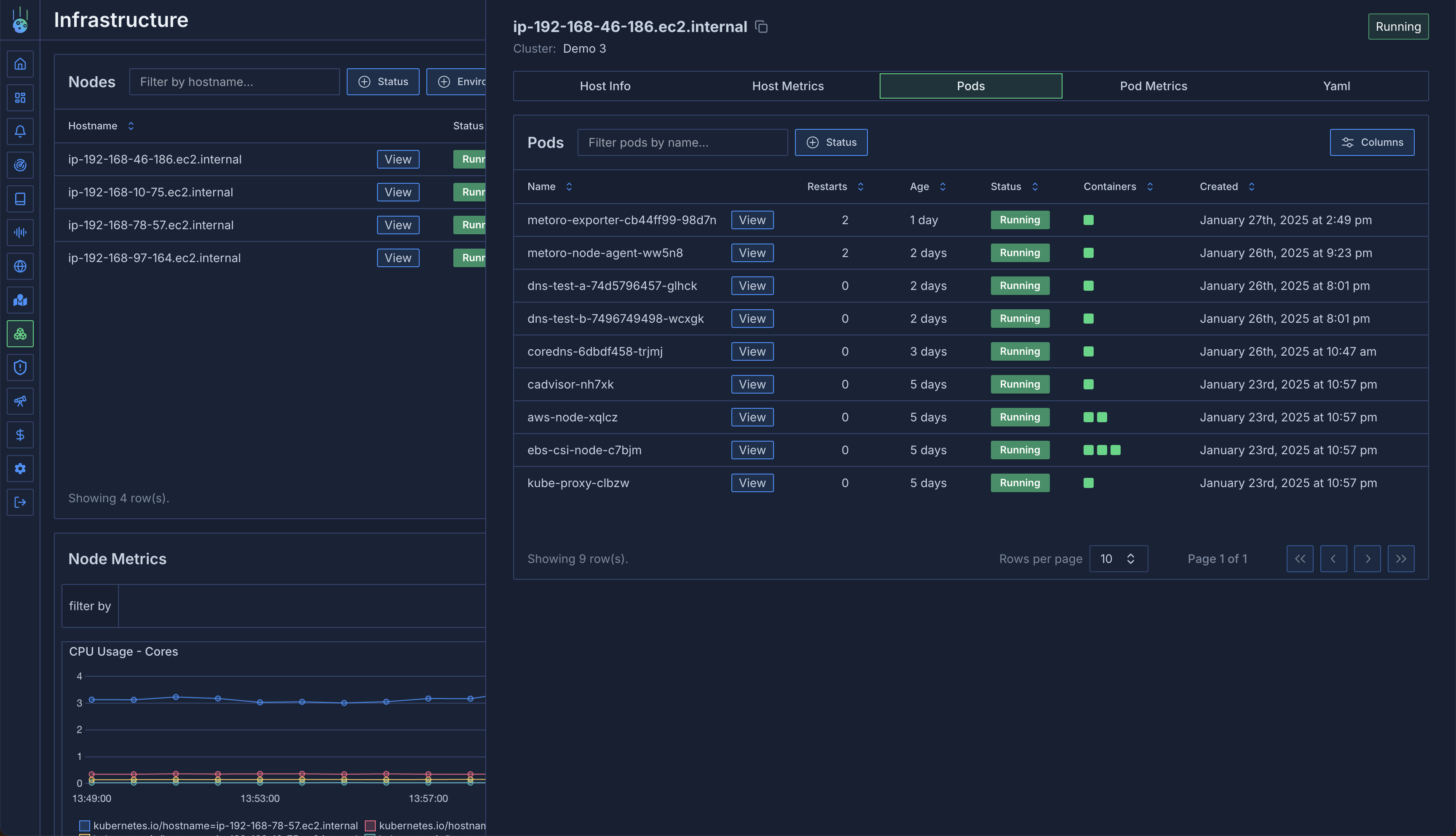

### Pods View

A detailed table of all pods running on the node:

* Restart frequency

* Uptime

* Pod status and conditions

* Resource usage

### Pods View

A detailed table of all pods running on the node:

* Restart frequency

* Uptime

* Pod status and conditions

* Resource usage

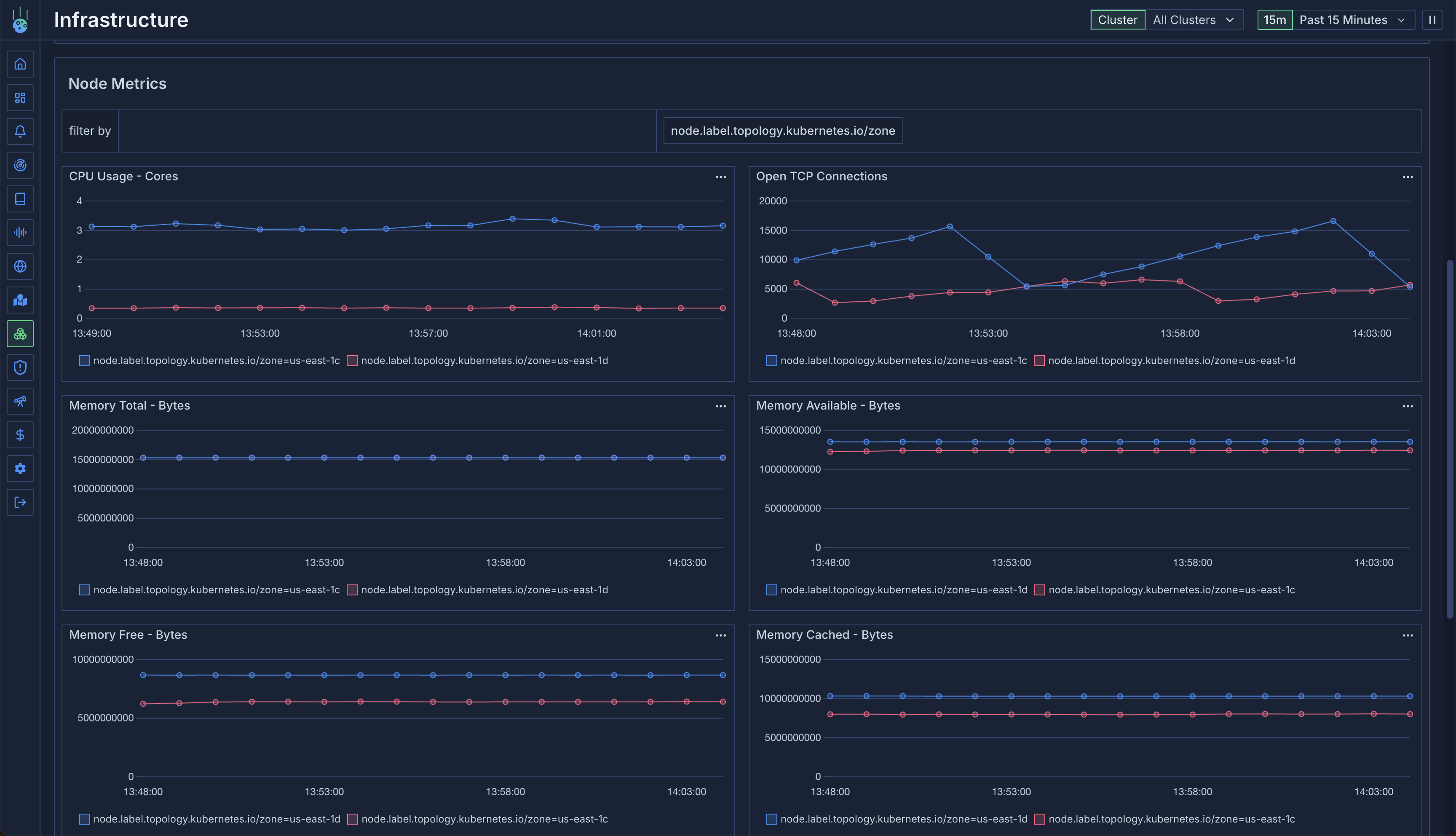

### Pod Metrics

Aggregate metrics for pods on the node:

* CPU usage per pod

* Memory consumption

* Resource distribution

* Performance analysis

This view is particularly useful for: